PIX4D API


This document describes how to get started with the PIX4Dengine Cloud REST API, which provides third-party applications with access to the Pix4D cloud service. It allows a PIX4Dengine Cloud client to access its data in the cloud and perform a range of operations on it.

- For end-to-end security, HTTPS is used in all the APIs

- The base URL for API endpoints is: https://cloud.pix4d.com

- Each REST API call needs to be authenticated. See Authentication below

- The communication format is expected to be JSON, unless otherwise stated

To register the application and start using the PIX4Dengine Cloud REST API, you will need to acquire a PIX4Dengine Cloud license. Please contact us at https://www.pix4d.com/enterprise/#contact to request one.

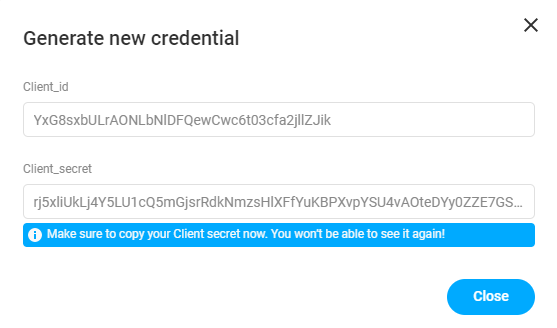

Once the license has been issued, a client_id/client_secret pair representing your application is needed. This pair will be used to authenticate.

Note that as its name suggests, client_secret is a password identifying the client application and should therefore be handled securely. In order to generate client_id/client_secret, please follow these steps:

Log in to https://cloud.pix4d.com with your Pix4D account.

Go to https://account.pix4d.com, choose your Organization, and open its Dashboard.

In the API access section, the list of existing keys is displayed (it will be empty the first time a user logs in):

- Click on Generate new API credentials and select the correct license:

- Click on Generate, and a new pair of credentials will be generated. Make sure they are copied, as they will not be displayed again:

In order to disable existing credentials, they have to be deleted by clicking on Delete.

Equipped with the API client_id/client_secret, the first step is to retrieve an authentication token. An authentication token identifies both the application connecting to the API and the Pix4D user this application is connecting on behalf of.

This token must then be passed along with every single request that the application makes to the API. It is passed in the HTTP Authorization header, like so:

Authorization: Bearer <ACCESS_TOKEN>

The PIX4Dengine Cloud REST API uses OAuth 2.0, the industry standard for connecting apps and accounts. OAuth 2.0 supports several "authentication flows" to retrieve an authentication token. Pix4D supports several of them, each used for a specific purpose, but for PIX4Dengine Cloud customers only one is relevant: "Client Credentials".

Using this flow, an API client application can get access to its own Pix4D user account (and only that account). With this method, authentication is straightforward and only requires the application's client_id/client_secret pair.

The client must send the following HTTP POST request to https://cloud.pix4d.com/oauth2/token/ with a payload containing:

- grant_type: client_credentials

- client_id: the client ID of your application

- client_secret: the client secret of your application

- token_format: jwt

Example:

```shell
curl --request POST \
  -d "grant_type=client_credentials&token_format=jwt&client_id=YuB7fu…&client_secret=GMSVvt8dF…" \
  https://cloud.pix4d.com/oauth2/token/
```

Token content

The response to the authentication request above has the following content (in JSON):

- access_token: the token value that you must include in all your requests

- token_type: for Pix4D, this is always a Bearer token

- expires_in: the number of seconds the token is valid for. After this period, API requests using this token are rejected, and you need to request a new token through the authentication procedure again

- scope: describes what the token is valid for. In this case, it always grants read and write access (read:cloud write:cloud), since you get full access to your own account

```json
{
  "access_token": "<ACCESS_TOKEN>",
  "expires_in": 172800,
  "token_type": "Bearer",
  "scope": "read:cloud write:cloud"
}
```

Refreshing the token

When the token expires, you simply need to perform the above authentication procedure again to get a fresh token.
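Because a token stops working after expires_in seconds, a long-running script can check whether it is time to request a new one. The following POSIX shell sketch assumes you record the Unix time at which you obtained the token (`date +%s`, supported by GNU and BSD date); the function name token_needs_refresh and the 300-second safety margin are illustrative choices, not part of the API.

```shell
# Succeeds (exit status 0) when a token obtained at ISSUED_AT (Unix seconds)
# with a lifetime of EXPIRES_IN seconds should be replaced at time NOW.
# MARGIN (default 300 s, an arbitrary choice) refreshes a little early so the
# token cannot expire in the middle of a request.
token_needs_refresh() {
    issued_at=$1
    expires_in=$2
    now=${3:-$(date +%s)}
    margin=${4:-300}
    [ "$now" -ge $((issued_at + expires_in - margin)) ]
}

# Example: record the time when fetching a token, then check before each call.
# issued_at=$(date +%s)
# if token_needs_refresh "$issued_at" 172800; then
#     echo "request a new token"
# fi
```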

In this guide, you will discover how to get a token, upload a new project, get it processed, and access the results.

API Access

You need a PIX4Dengine Cloud license to authenticate and get access to the API. Please contact your Pix4D reseller to start a trial if you don't have a license already. More information about PIX4Dengine Cloud is available on our product page.

With this license come your authentication credentials, the Client ID and the Client Secret Key, which are the two values you need in this guide.

Terminal tooling

This guide can be completed from the Terminal using basic tooling:

- curl: the command-line tool used to call the API; it should be included in your OS or Docker image

- aws-cli: used to upload and download the data; you can get it directly from AWS: https://aws.amazon.com/cli/

Photos to process

We will use a set of photos to be processed in this guide. If you don't have a suitable dataset at hand, feel free to download one of our sample datasets, for example, the building dataset: select "Download" then "Input Images" from the UI. This guide assumes that you unzip the archive into ./photos.

We are using the OAuth2 Client Credentials flow to generate an access token. Using the Client ID and Client Secret provided with your PIX4Dengine Cloud license, you can get an Access Token using the following curl command:

```shell
export PIX4D_CLIENT_ID=__YOUR_CLIENT_ID__
export PIX4D_CLIENT_SECRET=__YOUR_CLIENT_SECRET_KEY__

curl --request POST \
  --url https://cloud.pix4d.com/oauth2/token/ \
  --form "client_id=$PIX4D_CLIENT_ID" \
  --form "client_secret=$PIX4D_CLIENT_SECRET" \
  --form grant_type=client_credentials \
  --form token_type=access_token \
  --form token_format=jwt
```

The response body is a JSON document containing an access_token attribute:

```json
{
  "access_token": "<__PIX4D_ACCESS_TOKEN__>",
  "expires_in": 172800,
  "token_type": "Bearer",
  "scope": "read:cloud write:cloud"
}
```

For the following examples, we reference the token as PIX4D_ACCESS_TOKEN:

```shell
export PIX4D_ACCESS_TOKEN=__PIX4D_ACCESS_TOKEN__
```
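Instead of copying the token by hand, you can capture it directly from the response. This is a minimal sketch under two assumptions: that the response uses the "access_token": "value" layout shown above, and that a dedicated JSON tool such as jq is not available (if it is, prefer it over the sed expression used here). The function names parse_access_token and pix4d_fetch_token are hypothetical.

```shell
# Print the access_token value from a token response read on stdin.
# Simplified parsing: expects "access_token": "<value>" on one line and no
# escaped quotes in the value.
parse_access_token() {
    sed -n 's/.*"access_token"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Request a token with the Client Credentials flow and print it.
# --fail makes curl exit with an error on HTTP errors instead of printing them.
pix4d_fetch_token() {
    curl --silent --show-error --fail --request POST \
        --url https://cloud.pix4d.com/oauth2/token/ \
        --form "client_id=$PIX4D_CLIENT_ID" \
        --form "client_secret=$PIX4D_CLIENT_SECRET" \
        --form grant_type=client_credentials \
        --form token_format=jwt |
        parse_access_token
}

# Usage:
# export PIX4D_ACCESS_TOKEN="$(pix4d_fetch_token)"
```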

You can learn more about authentication in the reference documentation (see Authentication).

Let's start by creating a project. The only required parameter is the project name, which can be passed as a JSON payload in the request body.

```shell
curl --request POST \
  --url https://cloud.pix4d.com/project/api/v3/projects/ \
  --header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" \
  --header "Content-Type: application/json" \
  --data '{"name": "My first project"}'
```

The response is a 201 CREATED, and the response body includes the project details. Note the project id and the AWS S3 properties (bucket_name and s3_base_path), which we will use later.

This is an extract of the relevant JSON properties:

```json
{
  "id": 877866,
  "bucket_name": "prod-pix4d-cloud-default",
  "s3_base_path": "user-123123123121312/project-877866"
}
```

```shell
export PROJECT_ID=877866
export S3_BUCKET=prod-pix4d-cloud-default
export S3_BASE_PATH="user-123123123121312/project-877866"
```

To upload the images, we recommend the AWS CLI or the Python boto3 library, but other tools can work as well. First, we need to retrieve the AWS S3 credentials associated with this project:

```shell
curl --url "https://cloud.pix4d.com/project/api/v3/projects/$PROJECT_ID/s3_credentials/" \
  --header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" \
  --header "Content-Type: application/json"
```

The response contains all the S3 information we need, in particular the access_key, secret_key, and session_token.

```json
{
  "access_key": "ASIATOCJLBKSU2CVJIHR",
  "secret_key": "5OGGBSvn8Sesdu8l...<remainder of secret key>",
  "session_token": "FwoGZXIvYX...<remainder of security token>",
  "expiration": "2021-05-10T21:55:47Z",
  "bucket": "prod-pix4d-cloud-default",
  "key": "user-199a56ab-7ac6-d6d1-4778-5b4d338fc9de/project-883349",
  "server_time": "2021-05-19T09:55:47.357641+00:00",
  "region": "us-east-1",
  "is_bucket_accelerated": true,
  "endpoint_override": "s3-accelerate.amazonaws.com"
}
```

We can store the S3 credentials in our environment so that they will get picked up by the AWS CLI tool.

```shell
export AWS_ACCESS_KEY_ID=ASIATOCJLBKSU2CVJIHR
export AWS_SECRET_ACCESS_KEY='5OGGBSvn8Sesdu8l...<remainder of secret key>'
export AWS_SESSION_TOKEN='FwoGZXIvYX...<remainder of security token>'
export AWS_REGION='us-east-1'
export AWS_S3_USE_ACCELERATE_ENDPOINT=true
```
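The same values can be exported straight from the s3_credentials response, which avoids copy-paste mistakes and makes it easy to fetch fresh credentials once they reach their expiration time. A minimal sketch, assuming the response layout shown above; json_string_field and export_s3_credentials are hypothetical helpers, and the sed-based field extraction is a simplification (a JSON parser such as jq is more robust). S3_BUCKET and S3_BASE_PATH are set from the bucket and key fields.

```shell
# Print the value of a "key": "value" string field from the JSON on stdin.
# Simplified: the field must be on one line and contain no escaped quotes.
json_string_field() {
    sed -n "s/.*\"$1\"[[:space:]]*:[[:space:]]*\"\([^\"]*\)\".*/\1/p" | head -n 1
}

# Export the AWS CLI variables from an s3_credentials response passed as $1.
# The response is passed as an argument rather than piped in, so that the
# exports happen in the current shell and not in a pipeline subshell.
export_s3_credentials() {
    AWS_ACCESS_KEY_ID=$(printf '%s\n' "$1" | json_string_field access_key)
    AWS_SECRET_ACCESS_KEY=$(printf '%s\n' "$1" | json_string_field secret_key)
    AWS_SESSION_TOKEN=$(printf '%s\n' "$1" | json_string_field session_token)
    AWS_REGION=$(printf '%s\n' "$1" | json_string_field region)
    S3_BUCKET=$(printf '%s\n' "$1" | json_string_field bucket)
    S3_BASE_PATH=$(printf '%s\n' "$1" | json_string_field key)
    export AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_SESSION_TOKEN \
        AWS_REGION S3_BUCKET S3_BASE_PATH
}

# Usage:
# export_s3_credentials "$(curl --silent --fail \
#     --url "https://cloud.pix4d.com/project/api/v3/projects/$PROJECT_ID/s3_credentials/" \
#     --header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}")"
```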

Make sure to prefix all your desired destination locations with the path returned in the credentials call. This is the only place for which write access is granted. Make sure that the files are proper images and their names include an extension supported by Pix4D.

Provided your images are in the folder $HOME/images and that this folder contains only images, you can run the command:

```shell
aws s3 cp "$HOME/images/" "s3://${S3_BUCKET}/${S3_BASE_PATH}/" --recursive
```

If your folder also contains files that are not images, you need to upload the images one by one, which may require the use of a script.
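A minimal sketch of such a script, assuming a POSIX shell and the AWS CLI configured with the credentials above. The list_images and upload_images helpers are hypothetical, and the extension allow-list (JPEG and TIFF, in any letter case) is only an example: check which formats Pix4D supports for your camera before relying on it.

```shell
# Print the regular files in directory $1 whose extension is jpg, jpeg, tif
# or tiff (case-insensitive). Subdirectories and other files are skipped.
list_images() {
    for f in "$1"/*; do
        [ -f "$f" ] || continue
        case $f in
            *.[jJ][pP][gG] | *.[jJ][pP][eE][gG] | *.[tT][iI][fF] | *.[tT][iI][fF][fF])
                printf '%s\n' "$f" ;;
        esac
    done
}

# Upload the selected images one by one into the project's S3 prefix.
upload_images() {
    list_images "$1" | while IFS= read -r f; do
        aws s3 cp "$f" "s3://${S3_BUCKET}/${S3_BASE_PATH}/"
    done
}

# Usage:
# upload_images "$HOME/images"
```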


You then need to register the images in the PIX4Dengine Cloud API so that they will be processed. Because files that are not project inputs can also be uploaded, the API cannot know which files are meant as inputs; each uploaded file must therefore be registered explicitly. Register the uploaded files with a single API call:

```shell
curl --request POST \
  --url "https://cloud.pix4d.com/project/api/v3/projects/$PROJECT_ID/inputs/bulk_register/" \
  --header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" \
  --header "Content-Type: application/json" \
  --data "{
    \"input_file_keys\": [
      \"${S3_BASE_PATH}/P0350035.JPG\",
      \"${S3_BASE_PATH}/P0360036.JPG\",
      \"${S3_BASE_PATH}/P0370037.JPG\",
      \"${S3_BASE_PATH}/P0380038.JPG\",
      \"${S3_BASE_PATH}/P0390039.JPG\",
      \"${S3_BASE_PATH}/P0400040.JPG\",
      \"${S3_BASE_PATH}/P0410041.JPG\",
      \"${S3_BASE_PATH}/P0420042.JPG\",
      \"${S3_BASE_PATH}/P0430043.JPG\",
      \"${S3_BASE_PATH}/P0440044.JPG\"
    ]
  }"
```

The response confirms, amongst other data, how many images have been registered:

```json
{ "nb_images_registered": 10 }
```
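Typing every key by hand does not scale beyond a few images; the payload can instead be generated from the local file names. A minimal sketch, assuming the images were uploaded flat (no subfolders) directly under S3_BASE_PATH, as with the aws s3 cp command above; build_register_payload is a hypothetical helper, and file names containing double quotes or backslashes are not escaped.

```shell
# Print a bulk_register payload with one S3 key per file given after the
# base path. Each key is "<s3_base_path>/<file name>", matching a flat upload.
build_register_payload() {
    base_path=$1
    shift
    printf '{"input_file_keys": ['
    sep=''
    for f in "$@"; do
        printf '%s"%s/%s"' "$sep" "$base_path" "$(basename "$f")"
        sep=', '
    done
    printf ']}\n'
}

# Usage:
# curl --request POST \
#     --url "https://cloud.pix4d.com/project/api/v3/projects/$PROJECT_ID/inputs/bulk_register/" \
#     --header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" \
#     --header "Content-Type: application/json" \
#     --data "$(build_register_payload "$S3_BASE_PATH" "$HOME"/images/*.JPG)"
```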

You are now ready to start processing your project:

```shell
curl --request POST \
  --url "https://cloud.pix4d.com/project/api/v3/projects/$PROJECT_ID/start_processing/" \
  --header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" \
  --header "Content-Type: application/json"
```

To follow the progress, query the project details:

```shell
curl --url "https://cloud.pix4d.com/project/api/v3/projects/$PROJECT_ID/" \
  --header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" \
  --header "Content-Type: application/json"
```

A response can look like:

```json
{
  "id": 883349,
  "name": "My first project",
  "display_name": "My first project",
  "project_group_id": null,
  "is_demo": false,
  "is_geolocalized": true,
  "create_date": "2021-05-19T11:53:39.504849+02:00",
  "public_status": "PROCESSING",
  "display_detailed_status": "Waiting for processing",
  "error_reason": "",
  "user_display_name": "Jhon Doe",
  "project_thumb": "<__URL__>",
  "detail_url": "https://cloud.pix4d.com/project/api/v3/projects/883349/",
  "acquisition_date": "2021-05-19T11:53:38.973075+02:00",
  "project_type": "pro",
  "image_count": 10,
  "last_datetime_processing_started": "2021-05-19T10:58:10.514800Z",
  "last_datetime_processing_ended": null,
  "bucket_name": "prod-pix4d-cloud-default",
  "s3_bucket_region": "us-east-1",
  "s3_base_path": "user-188a56ab-7ac6-d6d1-4778-5b4d338fc9de/project-883349",
  "never_delete": false,
  "under_trial": false,
  "uuid": "239ae97821d54f98975bc0afa2fcc72f",
  "coordinate_system": "",
  "outputs": {
    "mesh": { "texture_res": {} },
    "images": {
      "project_thumb": {
        "status": "processed",
        "name": "project_thumb.jpg",
        "s3_key": "user-188a56ab-7ac6-d6d1-4778-5b4d338fc9de/project-883349/thumb/project_thumb.jpg",
        "s3_bucket": "prod-pix4d-cloud-default"
      },
      "reflectance": {}
    },
    "map": { "layers": {}, "bounds": { "sw": [0, 0], "ne": [0, 0] } },
    "bundles": {
      "inputs": {
        "status": "requestable",
        "request_url": "https://cloud.pix4d.com/project/api/v3/projects/883349/inputs/zip/"
      }
    },
    "reports": {}
  },
  "min_zoom": -1,
  "max_zoom": -1,
  "proj_pipeline": ""
}
```

Once public_status changes from PROCESSING to DONE, the project's main outputs are ready to be retrieved.
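Processing can take a while, so scripts usually poll the project details until public_status reaches DONE. A minimal sketch, assuming a POSIX shell; wait_for_done and project_status are hypothetical helpers, and the polling interval and maximum number of attempts are yours to choose. The status command is passed as arguments so it can be replaced, for example by a stub when testing. The sketch keeps polling for any status other than DONE, so check error_reason in the project details if it gives up.

```shell
# Print the public_status field of project $1 (simplified sed parsing of the
# project details response; a JSON parser such as jq is more robust).
project_status() {
    curl --silent --fail \
        --url "https://cloud.pix4d.com/project/api/v3/projects/$1/" \
        --header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" |
        sed -n 's/.*"public_status"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' |
        head -n 1
}

# Run the command given after INTERVAL and MAX_TRIES until it prints DONE.
# Succeeds as soon as DONE is seen; fails after MAX_TRIES attempts.
wait_for_done() {
    interval=$1
    max_tries=$2
    shift 2
    tries=0
    while [ "$tries" -lt "$max_tries" ]; do
        current_status=$("$@") || current_status=''
        echo "attempt $((tries + 1)): ${current_status:-<no status>}" >&2
        [ "$current_status" = DONE ] && return 0
        tries=$((tries + 1))
        sleep "$interval"
    done
    return 1
}

# Usage: check every 60 seconds, for at most 6 hours.
# wait_for_done 60 360 project_status "$PROJECT_ID"
```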

Once the project is processed, by querying its details, it is possible to:

- Get its PIX4Dcloud visualization page

- Get its PIX4Dcloud public page link (read-only)

- Get any asset that was produced (in the form of an S3 link to the asset to be downloaded)

This guide describes all of the different ways to process a project with PIX4Dengine Cloud API. Once the project has been created, in order to process it, the following endpoint must be called:

POST on https://cloud.pix4d.com/project/api/v3/projects/{id}/start_processing/ (see the full documentation for this endpoint).

The request body includes:

```json
{
  "tags": ["string"]
}
```

Depending on the type of processing, not all of the parameters in the request body are required. There are different types of processing:

- Nadir images (3d-maps)

- Flat terrain

- Oblique images

- Mobile processing

- Building reconstruction projects

Unless explicitly specified, processing types are mutually exclusive.

Nadir images (3d-maps)

A faster processing pipeline for nadir datasets with the newest algorithms that yields better results and has better management of coordinate systems. It supports vertical coordinate systems over an ellipsoid, geoid model, or user-defined constant geoid undulation. This pipeline also produces a 3D mesh with improved visualization in the PIX4Dcloud viewer.

This is the default pipeline if nothing is specified.

It can produce the following outputs:

- Orthophoto

- DSM

- Point cloud

- 3D mesh

Similarly to the other pipelines, it can be selected by using nadir (or equivalently 3d-maps) in the tags parameter of the processing options payload:

```json
{
  "tags": ["nadir"]
}
```

Flat terrain

This option enables specific settings for particularly flat scenes, for example a field with few or no vertical structures such as trees or buildings. To enable those settings, use the flat tag in addition to the nadir tag above in the tags parameter of the processing options payload:

```json
{
  "tags": ["nadir", "flat"]
}
```

Note that this tag is only valid for the nadir processing pipeline (that is, together with the nadir or 3d-maps tag), not for the oblique or building processing pipelines.
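The tag rules above can be checked client-side before calling start_processing. A minimal sketch, assuming a POSIX shell; build_processing_body is a hypothetical helper that only knows the tags described in this guide (nadir, 3d-maps, flat, oblique, building) and treats nadir/3d-maps, oblique and building as mutually exclusive pipelines. The API remains the authority on which combinations it accepts.

```shell
# Print a start_processing body for the given tags, or fail with a message
# on stderr when the combination breaks the rules described above.
build_processing_body() {
    pipelines=0
    has_nadir=no
    has_flat=no
    tags=''
    for tag in "$@"; do
        case $tag in
            nadir | 3d-maps) pipelines=$((pipelines + 1)); has_nadir=yes ;;
            oblique | building) pipelines=$((pipelines + 1)) ;;
            flat) has_flat=yes ;;
            *) echo "unknown tag: $tag" >&2; return 1 ;;
        esac
        tags="${tags:+$tags, }\"$tag\""
    done
    if [ "$pipelines" -gt 1 ]; then
        echo "only one of nadir/3d-maps, oblique or building may be used" >&2
        return 1
    fi
    if [ "$has_flat" = yes ] && [ "$has_nadir" = no ]; then
        echo "flat is only valid together with nadir or 3d-maps" >&2
        return 1
    fi
    printf '{"tags": [%s]}\n' "$tags"
}

# Usage:
# body=$(build_processing_body nadir flat) &&
# curl --request POST \
#     --url "https://cloud.pix4d.com/project/api/v3/projects/$PROJECT_ID/start_processing/" \
#     --header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" \
#     --header "Content-Type: application/json" \
#     --data "$body"
```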

Oblique images

A faster processing pipeline for oblique datasets with the newest algorithms that yields better results and has better management of coordinate systems (e.g. it supports vertical coordinate systems: ellipsoidal, geoid or user-defined constant geoid undulation). This pipeline also produces a 3D mesh with improved visualization in the PIX4Dcloud viewer.

It can produce the following outputs:

- Orthophoto

- DSM

- Point cloud

- 3D mesh

Similarly to the other pipelines, it can be selected by using oblique in the tags parameter of the processing options payload:

```json
{
  "tags": ["oblique"]
}
```

Mobile processing

The API provides a third processing pipeline for images (and, optionally, depth data) captured with PIX4Dcatch. This photogrammetric pipeline is optimized for this type of data and produces better and faster results. It can be used with images alone or, if your mobile device is equipped with a LiDAR scanner, with both images and depth data. The outputs generated after processing are Orthophoto, DSM, point cloud, and 3D mesh. This pipeline is automatically selected when using images captured with PIX4Dcatch.

The LiDAR scanner captures depth information during the image acquisition. These LiDAR points will compensate for the lack of 3D points over reflective and low-texture surfaces.

More information on combining photogrammetry and LiDAR can be found in this article: https://www.pix4d.com/blog/lidar-photogrammetry

Building reconstruction projects

For images captured from oblique flights around targets with featureless facades, such as walls of a uniform color and texture, the building reconstruction pipeline can provide higher-quality results than standard processing.

This pipeline can be selected by passing building in the tags parameter:

```json
{
  "tags": ["building"]
}
```

Limitations

- Only RGB cameras and nadir flights are supported. See the full list of supported cameras

- The outputs generated are: Orthophoto, DSM, and log file (no point cloud or 3D mesh is generated)

- It is not possible to define specific processing options. The computation will always use the default parameters and will produce an ortho and DSM with a default resolution

- If the pipeline is used in areas with a lot of height changes (urban areas for example), artifacts in the ortho might appear

In the event of a processing failure, an error code and an error reason are given in the project details API response. Error codes are intended to be machine-readable, while error reasons are human-readable messages to aid in debugging.

The following table describes the possible error codes and their corresponding reasons:

| Code | Reason | Potential Mitigation |
|---|---|---|
| 10001 | An unexpected error occurred. | |
| 10002 | Processing exceeded allotted time. | |
| 10003 | Processing exceeded available resources. | |
| 10101 | Failed to create cameras. More information will be available in the processing log. | Refer to the processing log for additional details. |
| 10201 | Failed to calibrate a sufficient number of cameras. | Verify the image quality and overlap. |
| 10402 | Point cloud generation failed. | Verify the image quality and overlap. |
| 10410 | Processing failed. | |
| 10411 | Failed to densify sparse point cloud. | Verify the quality of the calibration. |
| 10501 | 3D textured mesh generation failed. | Ensure that the dense point cloud consists of a single block. |
| 10601 | The input data is not valid. | |
| 10611 | The selected output CRS is not isometric. | Use a valid isometric CRS. |
| 10612 | The selected output CRS is not projected or arbitrary. | Use a valid projected or arbitrary CRS. |
| 10613 | An output CRS cannot be defined without a horizontal CRS component. | Use a valid CRS with a horizontal component. |
| 10614 | A geoid model or geoid height cannot be specified without a CRS vertical component. | Remove the geoid model or add a vertical component to the CRS. |
| 10615 | A geoid height cannot be specified with a geoid model. | Remove either the geoid height or the geoid model. |
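When automating processing, it can help to turn the error code from the project details into a suggested next step. A minimal sketch, assuming a POSIX shell; pix4d_error_hint is a hypothetical helper whose messages follow the Potential Mitigation column of the table above (for 10615, the hint is derived from the error reason itself). Codes without a listed mitigation fall back to a generic message pointing at the human-readable error reason.

```shell
# Print a suggested next step for a PIX4Dengine Cloud processing error code.
pix4d_error_hint() {
    case $1 in
        10101) echo "Refer to the processing log for additional details." ;;
        10201 | 10402) echo "Verify the image quality and overlap." ;;
        10411) echo "Verify the quality of the calibration." ;;
        10501) echo "Ensure that the dense point cloud consists of a single block." ;;
        10611) echo "Use a valid isometric CRS." ;;
        10612) echo "Use a valid projected or arbitrary CRS." ;;
        10613) echo "Use a valid CRS with a horizontal component." ;;
        10614) echo "Remove the geoid model or add a vertical component to the CRS." ;;
        10615) echo "Specify either a geoid height or a geoid model, not both." ;;
        *) echo "No listed mitigation for error code $1; check the error reason." ;;
    esac
}
```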

Here we will guide you through creating a "Site" to group Projects into a timeline view.

The primary use case for this is to group Projects of the same location, created over a time period, for example to track a building construction project.

In the Cloud Frontend you can compare these projects easily to see the differences between any of the processed assets in both 2D and 3D views.

POST on https://cloud.pix4d.com/project/api/v3/project_groups/, specifying a name and setting the project_group_type to bim.

```shell
curl --request POST --url https://cloud.pix4d.com/project/api/v3/project_groups/ \
  --header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" \
  --header "Content-Type: application/json" \
  --data '{"name": "Construction site 123", "project_group_type": "bim"}'
```

This returns the information about the Site (ProjectGroup) you have created, including its id.

```json
{
  "name": "Construction site 123",
  "id": 112233,
  ...
}
```

To assign a Project to a Site, you can PUT the project into the project_group (a.k.a. Site) using the move_batch endpoint. You need to supply the Organization's uuid in the owner_uuid field.

Note: using the PATCH method on the Project detail endpoint (project/api/v3/projects/<id>/) to move projects is deprecated and will be removed at some point in Q3 2025.

```shell
curl --request PUT \
  --url https://cloud.pix4d.com/common/api/v4/drive/move_batch/ \
  --header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" \
  --header 'content-type: application/json' \
  --data '{
    "owner_uuid": "58101312-de35-4a22-a951-943eba20a041",
    "source_nodes": [
      {
        "type": "project",
        "uuid": "0c26579b-f2ad-4dfb-a253-a4c43dbbbf53"
      }
    ],
    "target_type": "project_group",
    "target_uuid": "50df7f65-f480-4fcc-9f86-32d8d7724689"
  }'
```

To un-assign a Project from a Site, you can PUT the project into the organization (or even a folder) using the move_batch endpoint. You need to supply the Organization's uuid in the owner_uuid field.

Note: using the PATCH method on the Project detail endpoint (project/api/v3/projects/<id>/) to move projects is deprecated and will be removed at some point in Q3 2025.

```shell
curl --request PUT \
  --url https://cloud.pix4d.com/common/api/v4/drive/move_batch/ \
  --header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" \
  --header 'content-type: application/json' \
  --data '{
    "owner_uuid": "58101312-de35-4a22-a951-943eba20a041",
    "source_nodes": [
      {
        "type": "project",
        "uuid": "0c26579b-f2ad-4dfb-a253-a4c43dbbbf53"
      }
    ],
    "target_type": "organization",
    "target_uuid": "58101312-de35-4a22-a951-943eba20a041"
  }'
```
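Both requests differ only in target_type and target_uuid, so a small helper can build the body. A minimal sketch, assuming a POSIX shell; move_batch_body and pix4d_move_project are hypothetical names, and the UUIDs must be quote-free strings because they are inserted without JSON escaping.

```shell
# Print a move_batch body that moves one project (identified by its UUID)
# into the target node. Use target_type "project_group" with the Site UUID
# to assign the project, or "organization" with the organization UUID to
# un-assign it. Arguments: owner UUID, project UUID, target type, target UUID.
move_batch_body() {
    printf '{"owner_uuid": "%s", "source_nodes": [{"type": "project", "uuid": "%s"}], "target_type": "%s", "target_uuid": "%s"}\n' \
        "$1" "$2" "$3" "$4"
}

# Send the move request with the same four arguments.
pix4d_move_project() {
    curl --silent --show-error --fail --request PUT \
        --url https://cloud.pix4d.com/common/api/v4/drive/move_batch/ \
        --header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" \
        --header 'content-type: application/json' \
        --data "$(move_batch_body "$1" "$2" "$3" "$4")"
}
```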

The images which are uploaded for processing can have two different coordinate systems:

- WGS84

The image geotags include latitude, longitude and ellipsoidal height with respect to WGS84, which the software reads automatically. In that case, the input coordinate system is set to WGS84.

- Arbitrary

The images do not have any geolocation, so the input coordinate system is set to arbitrary.

Any other input coordinate system is not supported.

The output coordinate system is the coordinate system that the outputs refer to.

Default output coordinate system

When nothing is specified, the output coordinate system is set up by default:

If the input coordinate system is WGS84, the default output coordinate system is WGS84 / UTM zone XX, where XX is the UTM zone determined by the position of the images.

If the input coordinate system is arbitrary, the output coordinate system is also arbitrary.

User-defined output coordinate system

It is possible to define an output coordinate system when the project is created:

POST on https://cloud.pix4d.com/project/api/v3/projects/

One of the parameters of the request body is coordinate_system which can be either:

- a full WKT string representing the coordinate system (can be an arbitrary one)

- a valid EPSG code, given in the format EPSG:2056 (only projected coordinate systems are supported)

- In order to get the result in an arbitrary system, the WKT string can include either:

- the value ARBITRARY_METERS that will produce the result in an arbitrary default coordinate system in meters

- the value ARBITRARY_FEET that will produce the result in an arbitrary default coordinate system in feet

- the value ARBITRARY_US_FEET that will produce the result in an arbitrary default coordinate system in US survey feet

```json
{
  "coordinate_system": "string"
}
```

- Vertical input coordinate system

The vertical input coordinate system is ellipsoidal.

- Vertical output coordinate system

The vertical output coordinate system is always the EGM96 geoid.

spatialreference.org hosts a database of EPSG-registered coordinate systems, which should cover most needs related to horizontal coordinate systems. For example, to select the Swiss coordinate system CH1903, search for it in the database and export it in OGC WKT format to get the WKT string as expected by PIX4D:

```
PROJCS["CH1903 / LV03",
    GEOGCS["CH1903",
        DATUM["CH1903",
            SPHEROID["Bessel 1841",6377397.155,299.1528128,
                AUTHORITY["EPSG","7004"]],
            AUTHORITY["EPSG","6149"]],
        PRIMEM["Greenwich",0,
            AUTHORITY["EPSG","8901"]],
        UNIT["degree",0.0174532925199433,
            AUTHORITY["EPSG","9122"]],
        AUTHORITY["EPSG","4149"]],
    PROJECTION["Hotine_Oblique_Mercator_Azimuth_Center"],
    PARAMETER["latitude_of_center",46.9524055555556],
    PARAMETER["longitude_of_center",7.43958333333333],
    PARAMETER["azimuth",90],
    PARAMETER["rectified_grid_angle",90],
    PARAMETER["scale_factor",1],
    PARAMETER["false_easting",600000],
    PARAMETER["false_northing",200000],
    UNIT["metre",1,
        AUTHORITY["EPSG","9001"]],
    AXIS["Easting",EAST],
    AXIS["Northing",NORTH],
    AUTHORITY["EPSG","21781"]]
```

Coordinate systems must comply with the WKT standards:

- Geographic horizontal system e.g. WGS84, in this case the WKT starts with GEOGCS

- Projected horizontal system e.g. WGS84 / UTM zone 32, in this case the WKT starts with PROJCS

- Arbitrary horizontal system, in this case the WKT starts with LOCAL_CS
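Before creating a project, a script can check that a coordinate_system value has one of the shapes described in this section. A minimal sketch, assuming a POSIX shell; classify_coordinate_system is a hypothetical helper that only inspects the prefix of the string, and it assumes the ARBITRARY_* keywords are passed on their own. The API still decides whether a WKT string or EPSG code is actually valid and supported (for instance, only projected EPSG codes are accepted).

```shell
# Print the kind of coordinate_system value given in $1:
# epsg, arbitrary, wkt-projected, wkt-geographic, wkt-arbitrary or invalid.
classify_coordinate_system() {
    case $1 in
        EPSG: | EPSG:*[!0-9]*) echo invalid ;;
        EPSG:*) echo epsg ;;
        ARBITRARY_METERS | ARBITRARY_FEET | ARBITRARY_US_FEET) echo arbitrary ;;
        PROJCS\[*) echo wkt-projected ;;
        GEOGCS\[*) echo wkt-geographic ;;
        LOCAL_CS\[*) echo wkt-arbitrary ;;
        *) echo invalid ;;
    esac
}
```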

Learn how to use Ground Control Points (GCP) or Manual Tie Points (MTP) and Check Points in the computation.

A Ground Control Point (GCP) is a characteristic point whose coordinates are known. The coordinates have been measured with traditional surveying methods or obtained from other sources (LiDAR, older maps of the area, Web Map Service). GCPs are used to georeference a project and reduce noise.

A Manual Tie Point (MTP) is a characteristic point for which the coordinates are not known, but which is visible and accurately identifiable from several images, e.g. the corner of a wall. This is used to help the photogrammetry process to join the images in the scene.

Ground Control Points (GCPs)

The use of GCPs is possible with all PIX4D engines.

Once the project has been created and before processing it, it is possible to pass GCP coordinates which will be used in the computation:

POST on /project/api/v3/projects/{id}/gcp/register ({id} is the project ID)

Request body is as follows:

```json
{
  "gcps": [
    {
      "name": "GCP_123",
      "point_type": "CHECKPOINT",
      "x": 1.23,
      "y": 45.2,
      "z": 445.87,
      "xy_accuracy": 0.02,
      "z_accuracy": 0.02
    },
    {...}
  ]
}
```

- "name": It must be unique for each of the points

- "point_type": It can be either "CHECKPOINT" or "GCP". This article explains the difference between both

- "x","y","z": Coordinates of the point. They have to refer to the output coordinate system of the project and in the same units as the output coordinate system. Geographical coordinates are not supported

- "xy_accuracy","z_accuracy": The planimetric and altimetric accuracy of the GCPs or Check Points

Important

The coordinates of a GCP are:

- "x" : Coordinate in East direction (or West in some specific coordinate systems as in the example below)

- "y" : Coordinate in North direction (or South in some specific coordinate systems as in the example below)

- "z" : The altitude with respect to the ellipsoid or the geoid

Although most coordinate systems are defined as above, in some cases the axes are oriented differently. For example, consider a coordinate system in Japan and another one in South Africa:

- JGD2011 / Japan Plane Rectangular CS VI: The "x" coordinate points North and the "y" coordinate points East. In this case, the x,y coordinates have to be swapped so that the "x" in the request body points East and the "y" points North.

- Cape / Lo17: The "x" coordinate points South and the "y" coordinate points West. In this case, the x,y coordinates have to be swapped so that the "x" in the request body points West and the "y" points South.
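When the source coordinates list their axes in a different order, as in the two examples above, swapping the first two coordinates while building the request avoids mistakes. A minimal sketch, assuming a POSIX shell; gcp_entry is a hypothetical helper that prints one element of the gcps array. Numeric values are passed through unchanged, so they must already be in the project's output coordinate system and units.

```shell
# Print one "gcps" entry. Arguments: name, point_type (GCP or CHECKPOINT),
# first coordinate, second coordinate, z, xy_accuracy, z_accuracy, and an
# optional "swap" to exchange the first two coordinates.
gcp_entry() {
    name=$1 point_type=$2 a=$3 b=$4 z=$5 xy_acc=$6 z_acc=$7 swap=${8:-no}
    case $point_type in
        GCP | CHECKPOINT) ;;
        *) echo "point_type must be GCP or CHECKPOINT" >&2; return 1 ;;
    esac
    if [ "$swap" = swap ]; then
        x=$b y=$a
    else
        x=$a y=$b
    fi
    printf '{"name": "%s", "point_type": "%s", "x": %s, "y": %s, "z": %s, "xy_accuracy": %s, "z_accuracy": %s}\n' \
        "$name" "$point_type" "$x" "$y" "$z" "$xy_acc" "$z_acc"
}

# Usage:
# printf '{"gcps": [%s]}\n' "$(gcp_entry GCP_1 GCP 10.5 20.5 445.87 0.02 0.02 swap)"
```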

More information about GCPs

Manual Tie Points (MTPs)

MTPs can be created similarly to GCPs, but omitting the georeferencing, and through a different endpoint:

POST on /project/api/v3/projects/{id}/mtp/ ({id} is the project ID)

Request body is as follows:

```json
{
  "mtps": [
    {
      "name": "MTP_456",
      "is_checkpoint": false
    },
    {...}
  ]
}
```

- "name": It must be unique for each of the points in the project.

- "is_checkpoint": Similar to the GCP point_type. This article explains the difference between both.

GCP and MTP Marks

Once the GCP or MTP data has been registered, it is necessary to also pass the marks of each GCP/MTP, in other words the pixel coordinates of the GCPs/MTPs in each of the images.

For both GCP and MTP marks, the gcp field holds the name of the GCP or MTP.

POST on /project/api/v3/projects/{id}/mark/register/ ({id} is the project ID)

Request body is as follows:

```json
{
  "marks": [
    {
      "gcp": "GCP_123",
      "photo": "user-123/project-354/my_file.jpg",
      "x": 1.23,
      "y": 45.2
    },
    {...}
  ]
}
```

- "gcp": Name of the GCP/MTP registered in the previous step

- "photo": s3_key of the photo in which the GCP/MTP has been marked (each GCP/MTP must be marked in at least two photos)

- "x" and "y": Pixel coordinates (units are pixels) of the mark in the photo
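Marks are often kept in a simple text file before being sent. The following sketch assumes a hypothetical local format with one mark per line, "<gcp name> <photo s3_key> <x> <y>" separated by whitespace (so names and keys must not contain spaces). check_marks reports GCPs/MTPs that are marked in fewer than two distinct photos (it cannot detect points with no marks at all), and marks_body turns the same lines into the request body; both names are illustrative.

```shell
# Print the names of GCPs/MTPs marked in fewer than two distinct photos.
# Exit status is 1 if any are found, 0 otherwise.
check_marks() {
    awk '
        NF >= 2 && !seen[$1 SUBSEP $2]++ { photos[$1]++ }
        END {
            bad = 0
            for (g in photos) if (photos[g] < 2) { print g; bad = 1 }
            exit bad
        }
    '
}

# Print a mark/register body from the same "<gcp> <photo> <x> <y>" lines.
marks_body() {
    awk '
        BEGIN { printf "{\"marks\": [" }
        NF >= 4 {
            printf "%s{\"gcp\": \"%s\", \"photo\": \"%s\", \"x\": %s, \"y\": %s}", sep, $1, $2, $3, $4
            sep = ", "
        }
        END { print "]}" }
    '
}
```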

In order to mark the GCP/MTP in the images, it is possible to use PIX4Dmatic or other third party applications.

This guide describes the specific case of using Pix4D AutoGCP to automatically mark GCPs and process a project with PIX4Dengine Cloud API.

This allows you to simply upload GCP coordinates and images, and the system will take care of marking GCP targets in the images. If you have manually generated the marks data then see this article on how to use that data directly.

Creating the project and uploading images is the same as in other examples.

For information on how to optimally set out your marks on your survey site see this article.

This is the same as in other examples.

You must also set the coordinate_system when creating the project.

This should be the same coordinate system as your GCPs.

Registering the GCP coordinates works as described in the Ground Control Points (GCPs) section.

Call the start processing endpoint as with all processing.

Processing with AutoGCP will take some extra time, due to the extra compute required to analyse and mark the GCPs on the input images.

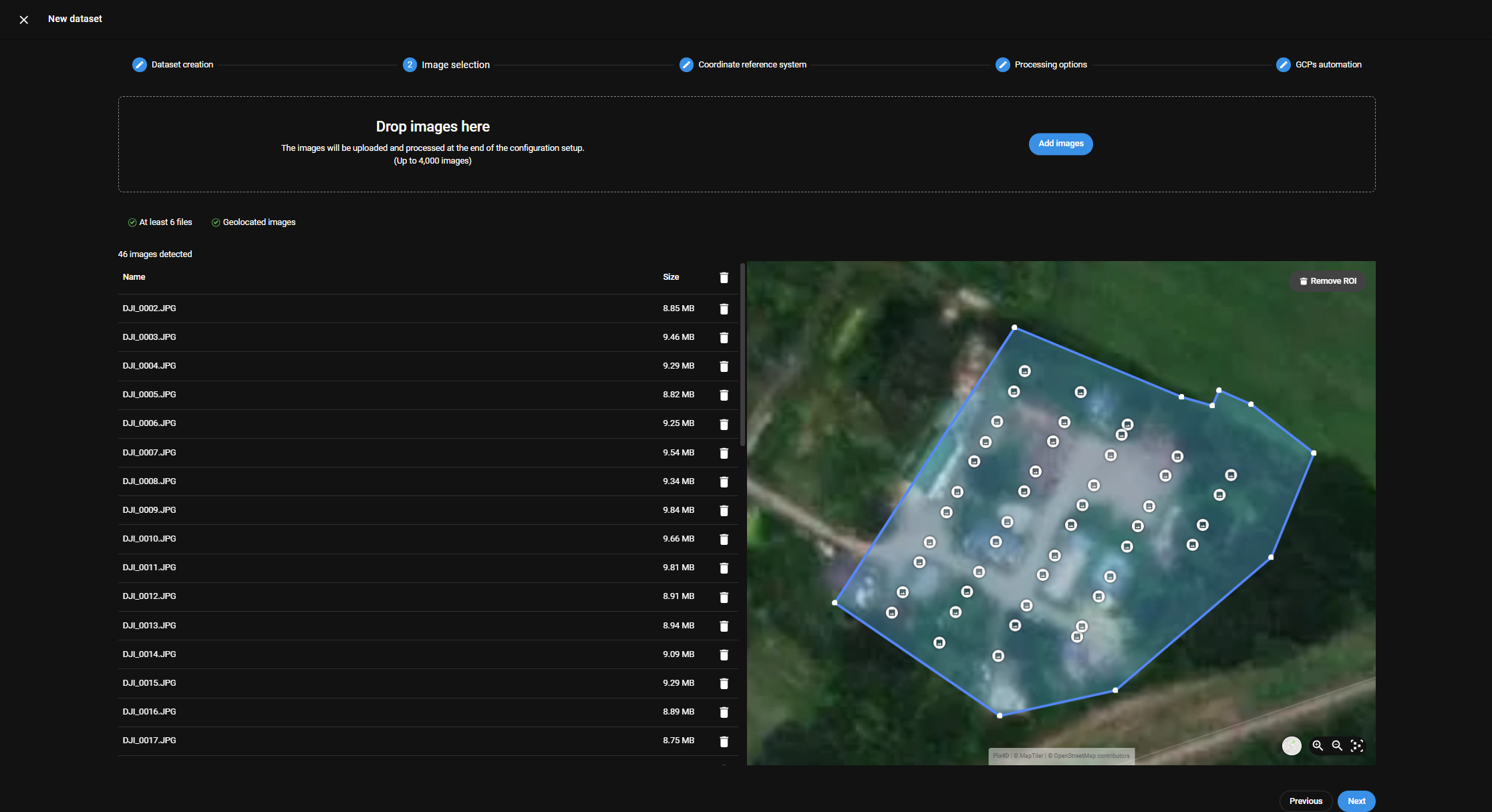

This feature allows the user to define a region of interest, which means no reconstructions will be created outside the defined area when processing a project with PIX4Dengine Cloud API.

Once the project has been created, in order to define the region of interest, the following endpoint must be called:

POST on https://cloud.pix4d.com/project/api/v3/projects/{id}/processing_options/ (see the full documentation for this endpoint).

The request body includes:

```json
{
  "area": {
    "plane": {
      "vertices3d": [
        ["float", "float", "float"],
        ["float", "float", "float"],
        ["float", "float", "float"],
        ["float", "float", "float"]
      ],
      "outer_boundary": ["int", "int", "int", "int"]
    },
    "thickness": "float"
  }
}
```

The only required field to set a region of interest is the plane, which consists of:

- The vertices3d field defines a list of 3D locations in WGS 84. For now, the altitude (z value) of each location is not considered, and the area is applied only in the 2D plane.

- The outer_boundary field lists indices into vertices3d, giving the order in which the locations must be connected when drawing the area.

There is also an optional field available inside plane named inner_boundaries to define areas inside the main area (defined with the outer_boundary) to be excluded from processing.

Finally, the thickness field is defined as a limit distance from the plane in the normal direction. If not specified, it is assumed to be infinite (usual case when limiting the region of interest).
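A body for a simple region of interest can be generated from a list of polygon corners. A minimal sketch, assuming a POSIX shell with awk; roi_body is a hypothetical helper that reads one "<longitude> <latitude>" pair per line in drawing order (the examples in this section list longitude first), sets the ignored altitude to 0, numbers the vertices 0, 1, 2, ... in outer_boundary, and omits thickness and inner_boundaries. Requiring at least three vertices is this sketch's own check.

```shell
# Read "<longitude> <latitude>" lines on stdin and print a
# processing_options body with a single outer boundary.
roi_body() {
    awk '
        BEGIN { n = 0 }
        NF >= 2 { lon[n] = $1; lat[n] = $2; n++ }
        END {
            if (n < 3) {
                print "a region of interest needs at least 3 vertices" > "/dev/stderr"
                exit 1
            }
            printf "{\"area\": {\"plane\": {\"vertices3d\": ["
            for (i = 0; i < n; i++)
                printf "%s[%s, %s, 0]", (i ? ", " : ""), lon[i], lat[i]
            printf "], \"outer_boundary\": ["
            for (i = 0; i < n; i++)
                printf "%s%d", (i ? ", " : ""), i
            print "]}}}"
        }
    '
}
```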

- Standard region of interest

```json
{
  "area": {
    "plane": {
      "vertices3d": [
        [3.248295746230309, 43.415212850276255, 0],
        [3.2484144251306066, 43.41525557252694, 0],
        [3.248465887662594, 43.415162880462645, 0],
        [3.2483382815883806, 43.415126642785765, 0]
      ],
      "outer_boundary": [0, 1, 2, 3]
    }
  }
}
```

- Region of interest with inner excluded zones

```json
{
  "area": {
    "plane": {
      "vertices3d": [
        [3.248295746230309, 43.415212850276255, 0],
        [3.2484144251306066, 43.41525557252694, 0],
        [3.248465887662594, 43.415162880462645, 0],
        [3.2483382815883806, 43.415126642785765, 0],
        [3.248345633378664, 43.415194540731015, 0],
        [3.248360336959232, 43.415163261911765, 0],
        [3.248417050769994, 43.41518500450734, 0]
      ],
      "outer_boundary": [0, 1, 2, 3],
      "inner_boundaries": [
        [4, 5, 6]
      ]
    }
  }
}
```

A volume can be computed for a project available on PIX4Dcloud. It is computed between a base surface, delimited by a boundary, and the terrain surface.

The base surface boundary is given as a set of vertex coordinates and defines the base plane for the volume calculation.

Before running this computation, make sure that:

- The project is in a PROCESSED state

- A DSM exists for the project

To compute the volume, send a POST request to https://api.webgis.pix4d.com/v1/project/{id}/volumes/.

The payload body fields are listed in the table below.

The payload must be in the same coordinate system and the same units as the project.

| Parameter | Type |
|---|---|
| base_surface | String |
| coordinates | Array[Array[Number]] |
| custom_elevation | Number |
| units | String |

Base surface

This parameter selects the base plane for the volume calculation. Accepted values are:

- average

- custom

- fitPlane

- triangulated

- highest

- lowest

When using custom, the custom_elevation parameter is required (see below).

More information about the different base surfaces can be found under Menu View > Volumes > Sidebar > Objects.

Coordinates

Each set of coordinates refers to a vertex of the boundary. The coordinates must be given with respect to the output project coordinate system and in the same units as the project (meters, feet or US survey feet).

custom_elevation

Optional, but it MUST be provided when base_surface is set to custom. If custom_elevation is provided, only the X,Y vertex coordinates are needed; the Z value is taken from custom_elevation.

units

Optional. If not provided, the preferred units will be used to calculate the volume.

Accepted values are:

- m (metres)

- yd (yards)

- ydUS (US survey yards)

The response parameters will be in the same coordinate system and the same units as the project. If the project is in meters, the volume will be computed in m³. If the project is in feet or US survey feet, the volume will be computed in yd³.

| Parameter | Type |
|---|---|
| cut | Number |
| cut_error | Number |
| fill | Number |
| fill_error | Number |

cut

The volume that is above the volume base. The volume is measured between the volume base and the surface defined by the DSM.

cut_error

Error estimation of the cut volume.

fill

The volume that is below the volume base. The volume is measured between the volume base and the surface defined by the DSM.

fill_error

Error estimation of the fill volume.

HTTP status code 200 - OK

If the request is successful, the body includes volumes and error estimations. The order of the response body fields doesn’t matter and is not guaranteed.

{

"cut": Number,

"cut_error": Number,

"fill": Number,

"fill_error": Number

}

HTTP status code 504 - Gateway Timeout

{

"message": "Network error communicating with endpoint"

}

| Cause | Likely a networking issue, either in the API Gateway or in a server that failed to route the request to the next hop |

| Solution | Resend the request |

{

"message": "Endpoint request timed out"

}

| Cause | The request took more than 29 seconds to compute. One possible reason is that too many vertices were sent to describe the polygon boundary |

| Solution | Reduce the number of polygon vertices |

HTTP status code 404 - Not found

{

"title": "404 Not Found"

}

| Cause | The project doesn’t exist |

| Solution | Check that the project exists |

HTTP status code 400 - Bad request

{

"title": "No COG DSM found for this project"

}

| Cause | The project doesn’t have a DSM. There may also be a delay between registering a DSM and the volume calculation being available, as PIX4Dcloud creates the COG DSM |

| Solution | Check that the project has a DSM. Allow some time for the system to register the DSM and create the COG DSM |

{

"title": "The polygon doesn't overlap the dataset"

}

| Cause | The vertices of the defined polygon lie outside the DSM |

{

"title": "Invalid Polygon"

}

| Cause | The interpolation of elevations inside the polygon boundary failed |

{

"title": "Too much data requested"

}

| Cause | The DSM doesn’t have a high enough overview level; as a result, the data for the area cannot be extracted within the maximum amount defined by the API |

| Solution | Reduce the size of the area for which the volume needs to be computed. If this is not suitable or the issue persists, contact Support |
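Reading the error tables above, only the first 504 case ("Network error communicating with endpoint") is worth resending unchanged; the other errors call for a smaller polygon, a DSM, or a different area. A minimal sketch of that policy follows, assuming the request is wrapped in a callable that returns the HTTP status and the decoded JSON body. The function names, the three attempts and the exponential delays are illustrative choices, not API requirements.

```python
import time
from typing import Callable, Tuple

RETRYABLE_MESSAGE = "Network error communicating with endpoint"


def is_retryable(status: int, body: dict) -> bool:
    """Only the transient gateway networking error is resent as-is."""
    return status == 504 and body.get("message") == RETRYABLE_MESSAGE


def call_with_retry(send: Callable[[], Tuple[int, dict]],
                    attempts: int = 3,
                    base_delay: float = 1.0) -> Tuple[int, dict]:
    """Call send() until it succeeds, fails for a non-retryable reason,
    or the attempts are exhausted; return the last (status, body)."""
    for attempt in range(attempts):
        status, body = send()
        if not is_retryable(status, body) or attempt == attempts - 1:
            return status, body
        # Exponential backoff: base_delay, 2 * base_delay, 4 * base_delay, ...
        time.sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be at least 1")
```

A "Endpoint request timed out" response is returned immediately, since resending the same polygon would most likely time out again.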

For a complete demonstration of a volume computation, see this Jupyter Notebook.

The following examples show the request body to POST to https://api.webgis.pix4d.com/v1/project/{id}/volumes/

Calculate a volume with a triangulated base surface

{

"base_surface": "triangulated",

"coordinates": [

[328726.692, 4688271.030, 159.725],

[328728.351, 4688298.208, 150.376],

[328750.527, 4688289.860, 150.250],

[328744.430, 4688271.645, 150.056]

]

}

Calculate a volume with a custom base surface

{

"base_surface": "custom",

"coordinates": [

[419430.327, 3469059.806],

[419429.338, 3469056.812],

[419431.319, 3469060.816],

[419433.329, 3469058.803]

],

"custom_elevation": 40.52

}

Note: The coordinates of the vertices are given with respect to the output project coordinate system and in the same units as the project (meters, feet or US survey feet).
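The request bodies above can also be assembled and sent from Python. The sketch below uses only the standard library; the helper names, the client-side checks (which simply mirror the accepted values listed earlier) and the Bearer Authorization header (as used by the Annotation API on the same host) are assumptions rather than a documented client.

```python
import json
import urllib.request
from typing import Optional, Sequence

BASE_SURFACES = {"average", "custom", "fitPlane", "triangulated", "highest", "lowest"}
UNITS = {"m", "yd", "ydUS"}


def build_volume_payload(base_surface: str,
                         coordinates: Sequence[Sequence[float]],
                         custom_elevation: Optional[float] = None,
                         units: Optional[str] = None) -> dict:
    """Assemble and sanity-check a volume request body (hypothetical helper)."""
    if base_surface not in BASE_SURFACES:
        raise ValueError(f"unknown base_surface: {base_surface}")
    if base_surface == "custom" and custom_elevation is None:
        raise ValueError("custom_elevation is required when base_surface is 'custom'")
    if units is not None and units not in UNITS:
        raise ValueError(f"unknown units: {units}")
    # Coordinates must already be in the project's output CRS and units.
    payload = {"base_surface": base_surface,
               "coordinates": [list(vertex) for vertex in coordinates]}
    if custom_elevation is not None:
        payload["custom_elevation"] = custom_elevation
    if units is not None:
        payload["units"] = units
    return payload


def compute_volume(project_id: int, token: str, payload: dict) -> dict:
    """POST the payload and return the decoded cut/fill response body."""
    request = urllib.request.Request(
        f"https://api.webgis.pix4d.com/v1/project/{project_id}/volumes/",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}",
                 "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)
```

For example, passing the four X,Y pairs of the custom example together with custom_elevation=40.52 produces the second request body shown above.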

Use PIX4Dcatch to capture your scene.

Once the capture is complete, select "Export all data" to generate the ZIP file.

Create your project

This is the same as in other examples.

Upload the ZIP file

Instead of uploading images, upload the ZIP file exported from PIX4Dcatch to S3.

aws s3 cp inputs.zip "s3://${S3_BUCKET}/${S3_BASE_PATH}/"

Then, register the ZIP file in place of the images.

curl --request POST \

--header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" \

--header "Content-Type: application/json" \

--data \

"{

\"input_file_keys\": [

\"${S3_BASE_PATH}/inputs.zip\"

]

}" \

https://cloud.pix4d.com/project/api/v3/projects/$PROJECT_ID/inputs/bulk_register/

- Finally, continue the flow to start processing

The ZIP file exported from PIX4Dcatch will be compatible with PIX4Dcloud.

If you need to modify the ZIP file's contents, however, be aware of the following requirements:

- It must contain a file called manifest.json at the root level

- This file must provide a semantic description of all the files in the ZIP archive, except manifest.json itself

- The manifest.json must conform to the JSON schema

Optionally, you can also include the list of GCPs and Marks in the ZIP contents, following the JSON schema defined for it.

The input_control_points.json file must comply with the rules below.

- The ZIP bundle is completely self-consistent: all the images and GCPs referenced by the Marks must be present in the ZIP itself.

- The id of each GCP must be unique.

- The CRS passed in the GCPs list:

  - Only the EPSG format is supported

  - The definition property in the CRS should be one of:

    - A string in the format Authority:code+code, where the first code is for a 2D CRS and the second one is for a vertical CRS (e.g. EPSG:4326+5773).

    - A string in the format Authority:code+Authority:code, where the first code is for a 2D CRS and the second one is for a vertical CRS (e.g. EPSG:4326+EPSG:5773).

    - A string in the format Authority:code, where the code is for a 2D or 3D CRS (e.g. EPSG:4326).

  - In the CRS, an optional geoid_height can be passed. Note that geoid is not supported in the current schema.

  - All GCPs must use the same CRS

  - If the output CRS is not already set, the GCP CRS will be used as the output CRS

  - If the output CRS is already set using the processing_options endpoint, the GCP coordinates will be transformed into the output CRS

  - If the output CRS is already set in the project coordinate_systems, a validation error will be returned.

If any of these requirements is not met, your project will not be processed and will be marked as errored.
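Because a violation only surfaces as an errored project, it can pay to check input_control_points.json before uploading. The sketch below covers a subset of the rules above (unique GCP ids, a single CRS definition in one of the three EPSG forms, no geoid key, and marked images present in the ZIP). The function name and the choice to compare only the definition strings when checking for "the same CRS" are assumptions; it is not an exhaustive schema validator.

```python
import re
from typing import Iterable, List

# EPSG:code, EPSG:code+code or EPSG:code+EPSG:code (only EPSG is supported).
CRS_DEFINITION = re.compile(r"^EPSG:\d+(\+(EPSG:)?\d+)?$")


def check_control_points(doc: dict, zip_members: Iterable[str]) -> List[str]:
    """Return the rule violations found in an input_control_points.json
    document (empty list if none). Hypothetical helper, not exhaustive."""
    members = set(zip_members)
    problems = []
    gcps = doc.get("gcps", [])
    ids = [gcp["id"] for gcp in gcps]
    if len(ids) != len(set(ids)):
        problems.append("GCP ids are not unique")
    definitions = {gcp["geolocation"]["crs"]["definition"] for gcp in gcps}
    if len(definitions) > 1:
        problems.append("GCPs use different CRSs")
    for definition in sorted(definitions):
        if not CRS_DEFINITION.match(definition):
            problems.append(f"unsupported CRS definition: {definition}")
    for gcp in gcps:
        if "geoid" in gcp["geolocation"]["crs"]:
            problems.append(f"{gcp['id']}: geoid is not supported, use geoid_height")
        # Every image referenced by a Mark must be inside the ZIP bundle.
        for mark in gcp.get("marks", []):
            if mark["photo"] not in members:
                problems.append(f"{gcp['id']}: image {mark['photo']} is not in the ZIP")
    return problems
```

zip_members can come from zipfile.ZipFile(...).namelist() when checking an existing archive.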

An example ZIP file layout looks like this:

├── input_control_points.json

├── logs

│ ├── log.json

│ └── test

│ └── abc.txt

├── manifest.json

├── new_sample

│ └── new

│ └── sample_image4.jpg

├── sample

│ └── sample_image3.jpg

├── sample_image1.jpg

└── sample_image2.jpg

The manifest.json from this zip file will contain:

{

"inputs": [

"sample_image1.jpg",

"sample_image2.jpg",

"sample/sample_image3.jpg",

"new_sample/new/sample_image4.jpg"

],

"log_files": [

"logs/log.json",

"logs/test/abc.txt"

],

"input_control_points": "input_control_points.json"

}
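When a ZIP is assembled by hand, the manifest can be derived from the directory layout. The sketch below follows the example above: image files (by extension) become inputs, everything under logs/ becomes a log file, and a root-level input_control_points.json is referenced as the control points. These classification rules, the extension list and the function names are illustrative assumptions; check the result against the JSON schema. Any file that fits no category raises an error, since the manifest must describe every file.

```python
import json
import zipfile
from pathlib import Path
from typing import Iterable

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".tif", ".tiff", ".png"}
CONTROL_POINTS = "input_control_points.json"


def manifest_for(paths: Iterable[str]) -> dict:
    """Classify ZIP-relative paths (forward slashes) into manifest fields."""
    manifest = {"inputs": [], "log_files": []}
    for rel in sorted(paths):
        if rel == "manifest.json":
            continue  # the manifest does not describe itself
        if rel == CONTROL_POINTS:
            manifest["input_control_points"] = rel
        elif rel.startswith("logs/"):
            manifest["log_files"].append(rel)
        elif Path(rel).suffix.lower() in IMAGE_SUFFIXES:
            manifest["inputs"].append(rel)
        else:
            raise ValueError(f"no manifest category for {rel}")
    return manifest


def write_zip(root: Path, destination: Path) -> None:
    """Write manifest.json at the ZIP root, then every file it describes.
    destination should live outside root."""
    files = [p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()]
    manifest = manifest_for(files)
    with zipfile.ZipFile(destination, "w", zipfile.ZIP_DEFLATED) as archive:
        archive.writestr("manifest.json", json.dumps(manifest, indent=2))
        described = manifest["inputs"] + manifest["log_files"]
        if "input_control_points" in manifest:
            described.append(CONTROL_POINTS)
        for rel in described:
            archive.write(root / rel, rel)
```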

The input_control_points.json from this ZIP file contains the list of all GCPs and Marks, as below:

{

"format": "application/opf-input-control-points+json",

"version": "0.2",

"gcps": [

{

"id": "gcp0",

"geolocation": {

"crs": {

"definition": "EPSG:4265+EPSG:5214",

"geoid_height": 14

},

"coordinates": [

1,

2,

3

],

"sigmas": [

5,

5,

10

]

},

"marks": [

{

"photo": "sample_image1.jpg",

"position_px": [

458,

668

],

"accuracy": 1.0

}

],

"is_checkpoint": true

}

],

"mtps": []

}

Once the project has been processed, a user can retrieve various data which are stored on the servers.

- Single files: any output, report, or input image can be requested individually

- ZIP files: All of the input images and available outputs and reports can be requested together in a ZIP file

1. Get the "s3_key" and "s3_bucket" of the file you want to retrieve

- Possible outputs and reports

GET on https://cloud.pix4d.com/project/api/v3/projects/{id}/outputs/

The response body will include the s3_key and s3_bucket of all of the output types. The example below shows the point_cloud output:

{

"result_type": "point_cloud",

"output_type": "point_cloud",

"availability": "done",

"s3_key": "user-123123123123123123123123/project-741581/test_3dmaps/2_densification/point_cloud/test_3dmaps_group1_densified_point_cloud.las",

"s3_bucket": "prod-pix4d-cloud-default",

"s3_region": "us-east-1",

"output_id": 147817230

}

A list of the main outputs which can be obtained is shown below:

| Result type | Output type | Description |
|---|---|---|
| ortho | ortho | Transparent orthomosaic in TIFF format |
| ortho | ortho_rgba_bundle | ZIP file containing transparent orthomosaic in TIFF format, .prj and .tfw files |
| ortho | ortho_rgb | Opaque orthomosaic in TIFF format |
| ortho | ortho_rgb_bundle | ZIP file containing opaque orthomosaic in TIFF format, .prj and .tfw files |
| ortho | ortho_cloud_optimized | Orthomosaic in Cloud-Optimized GeoTIFF format |
| ortho | ortho_cloud_optimized_display | Orthomosaic in Cloud-Optimized GeoTIFF format in Web Mercator CRS |
| dsm | dsm | Digital Surface Model (DSM) in TIFF format |
| dsm | dsm_cloud_optimized | DSM in Cloud-Optimized GeoTIFF format |
| dsm | dsm_cloud_optimized_display | DSM in Cloud-Optimized GeoTIFF format in Web Mercator CRS |
| point_cloud | point_cloud | Generated point cloud in LAS or LAZ format |
| point_cloud_slpk | point_cloud_slpk | Generated point cloud in SLPK format |
| 3d_mesh_obj | 3d_mesh_obj_zip | ZIP file containing .obj, .mtl, and .jpg files |
| 3d_mesh_obj | 3d_mesh_fbx | 3D mesh in FBX format |
| 3d_mesh_obj | b3dm_js | 3D mesh in Cesium format (index file) |
| 3d_mesh_slpk | 3d_mesh_slpk | 3D mesh in SLPK format |
| ndvi | ndvi | Generated NDVI layer in TIFF format |
| quality_report | quality_report | PDF file with information about the process |
| xml_quality_report | xml_quality_report | Quality Report in XML format |
| mapper_log | mapper_log | Log file of the process in text format |
| opf_project | opf_project | OPF project document |

Notes: Depending on the processing options and type of processing used, some outputs might not be generated.
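Once the outputs response is decoded, locating a specific file is a lookup by output_type. The sketch below assumes the response has already been turned into a list of entries shaped like the point_cloud example above (unwrap it first if your response is wrapped or paginated) and treats availability "done" as the only finished state; both are assumptions, and the helper names are hypothetical.

```python
from typing import Iterable, Optional, Tuple


def find_output(outputs: Iterable[dict], output_type: str) -> Optional[Tuple[str, str]]:
    """Return (s3_bucket, s3_key) of the first finished output of the given type."""
    for output in outputs:
        if output.get("output_type") == output_type and output.get("availability") == "done":
            return output["s3_bucket"], output["s3_key"]
    # Depending on the processing options, the output might not exist at all.
    return None


def s3_uri(bucket: str, key: str) -> str:
    """Build the s3:// URI expected by `aws s3 cp`."""
    return f"s3://{bucket}/{key}"
```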

- Input images

GET on https://cloud.pix4d.com/project/api/v3/projects/{id}/photos/

The response body will include the s3_key and s3_bucket of all of the input images. The example below shows the image IMG_4082.JPG:

{

"id": 165965565,

"s3_key": "user-105e0ece-f221-467e-bab0-de5fbf004b61/project-741581/images/IMG_4082.JPG",

"thumbs_s3_key": {

"legacy_png_512": "user-105e0ece-f221-467e-bab0-de5fbf004b61/project-741581/photo_thumbnails/images/IMG_4082_thumb.png"

},

"s3_bucket": "prod-pix4d-cloud-default",

"width": 4000,

"height": 3000,

"excluded_from_mapper": null

}

2. Get the files from AWS S3

We recommend using the AWS CLI or the Python boto3 library, but other tools can work as well. First, retrieve the AWS S3 credentials associated with this project:

GET on https://cloud.pix4d.com/project/api/v3/projects/{ID}/s3_credentials/

The response contains all the S3 information we need, including the access_key, secret_key and session_token.

{

"access_key": "ASIATOCJLBKSU2CVJIHR",

"secret_key": "5OGGBSvn8Sesdu8l...<remainder of the secret key>",

"session_token": "FwoGZXIvYX...<remainder of security token>",

"expiration": "2021-05-10T21:55:47Z",

"bucket": "prod-pix4d-cloud-default",

"key": "user-199a56ab-7ac6-d6d1-4778-5b4d338fc9de/project-883349",

"server_time": "2021-05-19T09:55:47.357641+00:00",

"region": "us-east-1",

"is_bucket_accelerated": true,

"endpoint_override": "s3-accelerate.amazonaws.com"

}

The S3 credentials can be exported as environment variables so that they are picked up by the AWS CLI.

export AWS_ACCESS_KEY_ID=ASIATOCJLBKSU2CVJIHR

export AWS_SECRET_ACCESS_KEY='5OGGBSvn8Sesdu8l...<remainder of the secret key>'

export AWS_SESSION_TOKEN='FwoGZXIvYX...<remainder of security token>'

export AWS_REGION='us-east-1'

export AWS_S3_USE_ACCELERATE_ENDPOINT=true

Once the credentials have been set, run the copy command to get a specific file using its unique "s3_key" and "s3_bucket":

aws s3 cp s3://${S3_BUCKET}/${S3_KEY} ./

As an example, in order to get the point cloud from the example above, install AWS CLI and run the following:

aws s3 cp s3://prod-pix4d-cloud-default/user-123123123123123123123123/project-741581/test_3dmaps/2_densification/point_cloud/test_3dmaps_group1_densified_point_cloud.las ./

This copies the test_3dmaps_group1_densified_point_cloud.las file from AWS S3 to your working directory.
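The same download can be done with boto3, reusing the s3_credentials response directly. In the sketch below, the helper names are hypothetical, and enabling the accelerate endpoint whenever is_bucket_accelerated is true is an assumption based on the response fields shown above. boto3 is imported inside download() so the credential mapping can be used without it. The credentials are temporary (see the expiration field), so fetch new ones when they expire.

```python
from typing import Optional


def client_kwargs(credentials: dict) -> dict:
    """Map the s3_credentials response onto boto3.client() keyword arguments."""
    return {
        "aws_access_key_id": credentials["access_key"],
        "aws_secret_access_key": credentials["secret_key"],
        "aws_session_token": credentials["session_token"],
        "region_name": credentials["region"],
    }


def download(credentials: dict, key: str, filename: str,
             bucket: Optional[str] = None) -> None:
    """Download one object from the project's bucket to a local file."""
    import boto3  # imported lazily so client_kwargs() works without boto3
    from botocore.config import Config

    accelerate = bool(credentials.get("is_bucket_accelerated"))
    s3 = boto3.client("s3", config=Config(s3={"use_accelerate_endpoint": accelerate}),
                      **client_kwargs(credentials))
    s3.download_file(bucket or credentials["bucket"], key, filename)
```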

The opf_project output is the project in the Open Photogrammetry Format (OPF), produced by processing the project on PIX4Dcloud.

This can be read by PIX4Dmatic, and also using tooling such as pyopf.

The output type opf_project points to the top-level project.opf file. This file references all the other files in the project with relative paths, allowing you to download them as needed using the same S3 credentials for a Project as in the examples above.

Once you have downloaded the project.opf you can use pyopf to discover the files it references:

from pyopf import io

from pyopf import project as opf

from pyopf import resolve

# Path to the project.opf downloaded from the Project's S3 prefix

index_file = "/some/directory/structure/project.opf"

pix4d_project: opf.Project = io.load(index_file)

# Relative paths of every resource referenced by the project's items

references = [resource.uri for item in pix4d_project.items for resource in item.resources]

# Resolve the project to access the referenced objects, e.g. the cameras and their images

objects = resolve.resolve(pix4d_project)

images = [camera.uri for camera_list in objects.camera_list_objs for camera in camera_list.cameras]

print("Prepend the following with your Project S3 prefix and download them:")

print(references)

print(images)

Notes:

- This is only available for non-deprecated photogrammetry pipelines, and only for projects processed after April 2025.

You can then open the project.opf in PIX4Dmatic or perform further analysis on the OPF documents.

Input images

GET on https://cloud.pix4d.com/project/api/v3/projects/{id}/inputs/zip/

An email will be sent containing a URL to download a ZIP file with all of the input images.

Outputs

GET on https://cloud.pix4d.com/project/api/v3/projects/{id}/outputs/zip/

An email will be sent containing a URL to download a ZIP file with all of the outputs.

You can upload 2D assets generated by PIX4D or third-party software for display in PIX4Dcloud, but only if these files meet some strict criteria on their properties.

In the PIX4Dengine Cloud API, assets can predominantly be thought of as one of:

- Downloadable

- Visualizable

The difference is that Downloadable assets are generally a single file, in a format for continuing the client's workflow in third-party software (QGIS, BIM, etc.), whereas Visualizable assets are in a level-of-detail format, often contain multiple files, and are designed for viewing in PIX4Dcloud.

Each type of asset is described by a different output_type. PIX4D will generally not verify that the contents of the Output have the desired properties, as this can be complex, resource intensive and expensive; it is up to the integrator to provide the correct data.

When working with data produced by PIX4D products (PIX4Dengine Cloud/PIX4Dcloud/PIX4Dmatic etc) the data will be pushed to PIX4Dcloud in both formats.

In the case of 2D assets this primarily means GeoTIFFs.

There are various flavours of open public GeoTIFF specifications that PIX4D utilises to ensure a good user experience when viewing and also compatibility when exporting to third party software.

For backwards compatibility, we allow uploading only the downloadable GeoTIFF, which we then post-process to make it visualizable. However, we only allow limited processing resources for this action, so the maximum file size we can handle is limited, and the processing will be aborted if the file is too large or the data too complex.

For downloadable GeoTIFFs, this means that the GeoTIFF (ortho or DSM) must be in the coordinate reference system specified in the Project.

If you are providing the GeoTIFF, ensure that you create the Project with the same coordinate reference system.

Since this is not displayed, but simply made available for users to download, we do not check the data contained within.

Output types for these are:

- dsm

- ortho

This is used by PIX4Dcloud's interface to display the 2D view.

The file must be a Cloud Optimized GeoTIFF (COG) and must be in the Web Mercator coordinate reference system, so that it can be displayed over our base maps without being transformed.

There are open source tools, such as GDAL, that can be used to convert GeoTIFFs in the Project coordinate reference system into COGs in Web Mercator.

Output types for these are:

- dsm_cloud_optimized_display

- ortho_cloud_optimized_display
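As one way of producing the display file with GDAL, the sketch below builds a gdalwarp command that reprojects a GeoTIFF to Web Mercator (EPSG:3857) and writes it with GDAL's COG driver (available from GDAL 3.1). The DEFLATE compression and the helper names are illustrative choices; other COG creation options may suit your data better, and the display requirements above still apply.

```python
import subprocess
from typing import List


def gdalwarp_to_display_cog(source: str, destination: str) -> List[str]:
    """Build a gdalwarp command producing a Web Mercator Cloud Optimized GeoTIFF."""
    return [
        "gdalwarp",
        "-overwrite",             # replace destination if it already exists
        "-t_srs", "EPSG:3857",    # Web Mercator
        "-of", "COG",             # GDAL's Cloud Optimized GeoTIFF driver
        "-co", "COMPRESS=DEFLATE",
        source,
        destination,
    ]


def run(command: List[str]) -> None:
    """Run the command, raising CalledProcessError if GDAL fails."""
    subprocess.run(command, check=True)
```

For example, run(gdalwarp_to_display_cog("my_ortho.tiff", "ortho_cog.tiff")) requires the gdalwarp executable on PATH.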

To allow for uploading (almost) unlimited file sizes for display and download, we require that you register the output types in the same request, which allows the system to understand that these are two representations of the same underlying data, and do not require additional processing.

Once you have uploaded the files to the Project's S3, you can register the S3 keys with the Project's Output API:

POST project/api/v3/projects/{id}/outputs/bulk_register/

{

"outputs": [

{

"output": "project-1111/dir1/dir2/my_ortho.tiff",

"output_type": "ortho"

},

{

"output": "project-1111/dir3/dir4/ortho_cog.tiff",

"output_type": "ortho_cloud_optimized_display"

}

]

}
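Since both representations must be registered in the same request, a small helper can pair each downloadable type with its display counterpart. The sketch below is illustrative: the helper names are hypothetical, and the full URL (host plus the plural projects path, as in the inputs bulk_register example earlier) and the Bearer header are assumptions.

```python
import json
import urllib.request

# Downloadable output type -> its display counterpart (from the lists above).
DISPLAY_TYPE = {
    "ortho": "ortho_cloud_optimized_display",
    "dsm": "dsm_cloud_optimized_display",
}


def bulk_register_payload(kind: str, downloadable_key: str, display_key: str) -> dict:
    """Register both representations of one asset in a single request."""
    if kind not in DISPLAY_TYPE:
        raise ValueError(f"kind must be one of {sorted(DISPLAY_TYPE)}")
    return {"outputs": [
        {"output": downloadable_key, "output_type": kind},
        {"output": display_key, "output_type": DISPLAY_TYPE[kind]},
    ]}


def register_outputs(project_id: int, token: str, payload: dict) -> int:
    """POST the payload and return the HTTP status code."""
    request = urllib.request.Request(
        f"https://cloud.pix4d.com/project/api/v3/projects/{project_id}/outputs/bulk_register/",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```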

This feature allows embedding the editor (both 2D and 3D views) in an iframe. Please contact your sales representative to enable this feature. You must specify the domain(s) where the PIX4Dcloud editor iframe will be embedded.

The feature works only with shared sites and datasets (information about share links can be found in this support article). This means that you first need to generate a share token for your project (dataset) or project group (site).

Read-Only Token Permission

Example request:

curl --request POST \

--header 'Content-Type: application/json' \

--header 'Accept: application/json' \

--header 'Authorization: **SECRET**' \

--data \

'{

"enabled": true,

"write": false,

"type": "Project",

"type_id": 1234

}' \

https://cloud.pix4d.com/common/api/v3/permission-token/

Example response:

{

"token": "d8dfbe6d-93b1-4bca-af40-5c469e3530da",

"enabled": true,

"write": false,

"type": "Project",

"type_id": 1234,

"creation_date": "2021-06-25T10:17:06.734910+02:00"

}

Read and Write Token Permission

Example request:

curl --request POST \

--header 'Content-Type: application/json' \

--header 'Accept: application/json' \

--header 'Authorization: **SECRET**' \

--data \

'{

"enabled": true,

"type": "Project",

"type_id": 1234

}' \

https://cloud.pix4d.com/common/api/v3/permission-token/

Example response:

{

"token": "05a114e1-804f-4e6c-a094-5d3eb80d2119",

"enabled": true,

"write": true,

"type": "Project",

"type_id": 1234,

"creation_date": "2021-06-25T10:17:06.734910+02:00"

}

The generated token has the shape of a UUID v4 (xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx).

Note: revoking (disabling) the share link will also disable your embedded editor. To revoke it, set enabled to false:
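The request bodies above differ only in the write and enabled fields, and the token can be checked for the UUID v4 shape before it is put into an embed URL. A minimal sketch with hypothetical helper names; it only handles the Project type shown in the examples, and leaving write out mirrors the read-and-write example.

```python
import uuid
from typing import Optional


def permission_token_payload(project_id: int, write: Optional[bool] = None,
                             enabled: bool = True) -> dict:
    """Body for the permission-token requests on a Project (dataset)."""
    payload = {"enabled": enabled, "type": "Project", "type_id": project_id}
    if write is not None:
        payload["write"] = write
    return payload


def is_share_token(value: str) -> bool:
    """True if value parses as a version 4 UUID."""
    try:
        return uuid.UUID(value).version == 4
    except ValueError:
        return False
```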

curl --request PATCH \

--header 'Content-Type: application/json' \

--header 'Accept: application/json' \

--header 'Authorization: **SECRET**' \

--data \

'{

"enabled": false,

"type": "Project",

"type_id": 1234

}' \

https://cloud.pix4d.com/common/api/v3/permission-token/

Embedding should be done through an HTML iframe tag.

As an example:

<iframe

src="https://embed.pix4d.com/cloud/default/dataset/256164/map?shareToken=b77fa15a-14ff-4c6e-b7eb-da05bad16bb2&lang=fr&theme=light"

referrerpolicy="strict-origin-when-cross-origin"

allow="geolocation"

frameborder="0"

width="100%"

height="100%"

allowfullscreen

></iframe>

Required Properties

- src: an embed URL to your site/dataset. The “URL templates” section explains how to build different URLs for your users.

- referrerpolicy="strict-origin-when-cross-origin": the minimal permission that lets us determine whether the white label is allowed to be displayed in the domain.

Optional Properties

- allow="geolocation": lets users use the geolocation feature of the editor.

- frameborder, width, height: recommended setup to make the editor appear seamless.

1. Single datasets

https://embed.pix4d.com/cloud/default/dataset/:dataset_id:/:view?:?shareToken={share_token}

dataset_id: id of the dataset

view?: optional parameter that can be either map or model.

- if nothing is passed, then we will decide which view is the best for that particular dataset

- map forces map view

- model forces model view

share_token: the generated share token in the “Manage share link” step

2. Site

https://embed.pix4d.com/cloud/default/site/:site_id:/dataset/:dataset_id:/:view?:?shareToken=:share_token

site_id: id of the site

dataset_id: id of the dataset

view?: optional parameter that can be either map or model.

- if nothing is passed, then we will decide which view is the best for that particular dataset

- map forces map view

- model forces model view

share_token: the generated share token in the “Manage share link” step

By defining additional query parameters, you can also set the language and the theme:

theme can be either light or dark. By default it’s dark.

lang en-US or ja or ko or it or es. By default it’s en-US.

For example, to get the embedded editor in French with the theme set to light, the URL would be:

https://embed.pix4d.com/cloud/default/dataset/256164/map?shareToken=b77fa15a-14ff-4c6e-b7eb-da05bad16bb2&lang=fr&theme=light
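The URL templates can be filled in programmatically. The sketch below is a hypothetical helper that reproduces the example URL above. Omitting the view segment entirely when no view is given is an assumption about the optional :view?: part, and lang is passed through unchecked.

```python
from typing import Optional
from urllib.parse import urlencode

EMBED_BASE = "https://embed.pix4d.com/cloud/default"


def embed_url(dataset_id: int, share_token: str, view: Optional[str] = None,
              site_id: Optional[int] = None, lang: Optional[str] = None,
              theme: Optional[str] = None) -> str:
    """Fill in the single-dataset or site URL template."""
    if view not in (None, "map", "model"):
        raise ValueError("view must be 'map', 'model' or None")
    if theme not in (None, "light", "dark"):
        raise ValueError("theme must be 'light', 'dark' or None")
    path = f"{EMBED_BASE}/site/{site_id}" if site_id is not None else EMBED_BASE
    path += f"/dataset/{dataset_id}"
    if view:
        path += f"/{view}"
    # Query parameters keep their insertion order: shareToken, lang, theme.
    query = {"shareToken": share_token}
    if lang:
        query["lang"] = lang
    if theme:
        query["theme"] = theme
    return f"{path}?{urlencode(query)}"
```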

To test your local website before publishing it to your domain, you can run a web server on any port that your machine knows as localhost.

For example, if the iframe HTML code from the example above is in an index.html file, you could run the following Python command to bring up a minimal web server:

python -m http.server 8000

Then navigate to localhost:8000/index.html in your local web browser to test the embedded view.

Opening the index.html file without going through a web server on localhost will fail with a 403: You are not authorized to access this website due to the Referer header not specifying an allowed domain.

Only localhost:* is available to new clients to test.

If you wish to deploy it to your own domain you must reach out to your Sales representative in PIX4D and supply the domain names you plan to host the embed on (for example domains for staging and production systems, so you can test before deploying), otherwise you will receive a 403 error.

The Annotation API allows programmatic management of annotations, letting API users perform the following operations:

Creating annotations

curl --location --request POST 'https://api.webgis.pix4d.com/v1/annotations/' \

--header 'Authorization: Bearer <insert JWT here>' \

--header 'Content-Type: application/json' \

--data-raw '{

"annotations": [

{

"entity_type": "Project",

"entity_id": 123456,

"properties": {

"name": "My annotation",

"color": "#FFFFFF80",

"description": "My first annotation"

},

"geometry": {

"coordinates": [

2.38,

57.322,

0

],

"type": "Point"

}

},

{

"entity_type": "Project",

"entity_id": 123456,

"properties": {

"name": "My annotation",

"color": "#FFFFFF80",

"description": "My second annotation"

},

"geometry": {

"coordinates": [

3.49,

68.433,

0

],

"type": "Point"

}

}

]

}'

A successful response will have a status of 201 CREATED and return the annotation ids in a body similar to the one below.

{

"annotations": [

{

"annotation_id": "Project_123456_62063898-531b-4389-93f9-ed5126338ff3",

"success": true

},

{

"annotation_id": "Project_123456_82f70b94-773c-47db-80dd-5576e569548f",

"success": true

}

]

}

The example above creates two points in the project coordinate system. To visualize the annotations, go to PIX4Dcloud.
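The creation body is a list of Project-scoped Point annotations, so it can be generated from a few values. The sketch below mirrors the fields of the example request; the helper names and the default color are illustrative assumptions, and only Point geometries are covered.

```python
from typing import List, Sequence


def point_annotation(entity_id: int, name: str, coordinates: Sequence[float],
                     color: str = "#FFFFFF80", description: str = "") -> dict:
    """One Point annotation attached to a Project, shaped like the example above."""
    return {
        "entity_type": "Project",
        "entity_id": entity_id,
        "properties": {"name": name, "color": color, "description": description},
        "geometry": {"type": "Point", "coordinates": list(coordinates)},
    }


def annotations_payload(*annotations: dict) -> dict:
    """Wrap annotations in the body expected by POST /v1/annotations/."""
    return {"annotations": list(annotations)}


def created_ids(response: dict) -> List[str]:
    """Ids of the annotations the 201 response reports as created."""
    return [a["annotation_id"] for a in response["annotations"] if a.get("success")]
```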

List annotations

curl --location --request GET 'https://api.webgis.pix4d.com/v1/annotations/?entity_type=Project&entity_id=697180' \

--header 'Authorization: Bearer <INSERT_JWT_HERE>'

A successful response will look something like this:

{

"results": [

{

"version": "1.0",

"entity_id": 697180,

"entity_type": "Project",

"id": "Project_697180_31c1e01d-39a9-4926-9054-87934cee3c69",

"created": "2022-05-17T08:16:49.669183+00:00",

"modified": "2022-05-17T08:16:49.669186+00:00",

"tags": [ ...

],

"geometry": { ...

},

"properties": {

"visible": true,

"camera_position": [ ...

],

"description": "Description",

"volume": { ...

},

"color_fill": "#00224488",

"name": "Annotation 0",

"color": "#00224488"

},

"extension": { ...

}

},

{

"version": "1.0",

"entity_id": 697180,

"entity_type": "Project",

"id": "Project_697180_4c8ac186-5427-46b5-8347-c1ee374fd10f",

"created": "2022-05-17T08:16:49.670391+00:00",

"modified": "2022-05-17T08:16:49.670393+00:00",

"tags": [ ...

],

"geometry": { ...

},

"properties": {

"visible": true,

"camera_position": [ ...

],

"description": "Description",

"volume": { ...

}

},

"extension": { ...

}

}

]

}

Deleting annotations

curl --location --request DELETE 'https://api.webgis.pix4d.com/v1/annotations/' \

--header 'Authorization: Bearer <INSERT JWT HERE>' \

--header 'Content-Type: application/json' \

--data-raw \

'{

"annotations": [

"Project_123456_12345678-1234-1234-1234-123456789abc",

"Project_654321_fedcba98-fedc-fedc-fedc-fedcba987654"

]

}'

A successful response will look something like this:

{

"annotations": [

{

"success": true,

"annotation_id": "Project_123456_12345678-1234-1234-1234-123456789abc"

},

{

"success": true,

"annotation_id": "Project_654321_fedcba98-fedc-fedc-fedc-fedcba987654"

}

]

}
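Listing and deleting combine naturally: select annotations from the list results by id, send them in one DELETE body, then check the per-annotation success flags. A minimal sketch with hypothetical helper names; the keep predicate is whatever rule your integration uses to decide which annotations to retain.

```python
from typing import Callable, List


def delete_payload(results: List[dict], keep: Callable[[dict], bool]) -> dict:
    """Body for DELETE /v1/annotations/ covering every listed annotation
    for which keep(annotation) is False."""
    return {"annotations": [a["id"] for a in results if not keep(a)]}


def failed_deletions(response: dict) -> List[str]:
    """Ids the DELETE response reports as not deleted."""
    return [a["annotation_id"] for a in response["annotations"] if not a.get("success")]
```

For example, keep=lambda a: a["properties"]["visible"] would delete only hidden annotations.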

Here we will guide you through using Folders to organize your Datasets (Projects) and Sites (ProjectGroups), then moving resources within the organization, and finally navigating the resulting "resource tree" by listing or searching content.

Folders can be used not only to organize content but also to organize permissions, as access to a Folder (and also to Projects and Project Groups) can be limited to certain users.

To create a Folder, POST to https://cloud.pix4d.com/common/api/v4/folders/, specifying a name and a parent using parent_type and parent_uuid. parent_type must be one of organization or folder...

curl --request POST \

--url https://cloud.pix4d.com/common/api/v4/folders/ \

--header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" \

--header "Content-Type: application/json" \

--data '{

"name": "Customer ABC",

"parent_uuid": "f6dfd584-c4a2-43ae-9e61-672b7d1f5058",

"parent_type": "organization"

}'

This will return information about the Folder you have created, including its uuid.

{

"uuid": "65f7d6ab-2e46-4b30-8a3a-38fdaf54307a",

"name": "Customer ABC"

}

To rename a Folder you can PATCH it to update the name field.

curl --request PATCH \

--url https://cloud.pix4d.com/common/api/v4/folders/65f7d6ab-2e46-4b30-8a3a-38fdaf54307a/ \

--header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" \

--header 'content-type: application/json' \

--data '{

"name": "Customer ABC Ltd"

}'

The return value confirms the updated name.

{

"name": "Customer ABC Ltd"

}

To remove a Folder, send a DELETE request. The Folder will be deleted along with all its descendants in the resource tree.

curl --request DELETE \

--url https://cloud.pix4d.com/common/api/v4/folders/65f7d6ab-2e46-4b30-8a3a-38fdaf54307a/ \

--header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}"

To create a Project (or Project Group) inside a Folder, simply add parent_type and parent_uuid to the body of the request.

curl --request POST \

--url https://cloud.pix4d.com/project/api/v3/projects/ \

--header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" \

--header 'content-type: application/json' \

--data '{

"name": "Project 6",

"parent_uuid": "65f7d6ab-2e46-4b30-8a3a-38fdaf54307a",

"parent_type": "folder"

}'

Once you have created Folders within your organization, you may want to move existing Projects and Project Groups (or other Folders) into them. To do this, send a PUT request to https://cloud.pix4d.com/common/api/v4/drive/move_batch/:

curl --request PUT \

--url https://cloud.pix4d.com/common/api/v4/drive/move_batch/ \

--header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}" \

--header 'content-type: application/json' \

--data '{

"owner_uuid": "58101312-de35-4a22-a951-943eba20a041",

"source_nodes": [

{

"type": "project_group",

"uuid": "d9c5bec2-e11f-4371-8640-3d1534e8e3b2"

},

{

"type": "folder",

"uuid": "bee32b9e-360c-404b-81d3-7a44ff88eb66"

},

{

"type": "project",

"uuid": "1ec0ba95-b16a-4ee5-9353-511ae7e46778"

}

],

"target_type": "folder",

"target_uuid": "65f7d6ab-2e46-4b30-8a3a-38fdaf54307a"

}'

Now that you have Projects and Project Groups arranged within Folders, you will find it useful to list these resources according to their location. The list endpoint of the Drive allows you to list resources within a given Folder or those at the "root" of the organization.

curl --request GET \

--url 'https://cloud.pix4d.com/common/api/v4/drive/folder/e8f6731b-dc00-4e27-afb9-2740fb76c843/' \

--header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}"

The paginated response will look like this:

{

"count": 4,

"next": null,

"previous": null,

"results": [

{

"legacy_id": 97389,

"uuid": "72cd5e09-38a6-4490-b8e7-97486f01e45d",

"type": "folder",

"date": "2025-02-21T13:45:24.978372+01:00",

"name": "Vancouver",

"metadata": null

},

{

"legacy_id": 97388,

"uuid": "a2c58c0f-861f-4240-b646-f35290fc81eb",

"type": "folder",

"date": "2025-02-21T13:45:00.906934+01:00",

"name": "Toronto",

"metadata": null

},

{

"legacy_id": 440328,

"uuid": "50df7f65-f480-4fcc-9f86-32d8d7724689",

"type": "project_group",

"date": "2025-03-06T17:25:46.890181+01:00",

"name": "Credit counter",

"metadata": null

},

{

"legacy_id": 1005051,

"uuid": "0c26579b-f2ad-4dfb-a253-a4c43dbbbf53",

"type": "project",

"date": "2025-03-06T17:23:43.384397+01:00",

"name": "CreditCounter",

"metadata": null

}

]

}
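The list and search responses are paginated with count, next and previous. The sketch below follows next until it is null. It assumes next is a complete URL, as in common paginated REST APIs, and takes the HTTP call as a parameter so it can be tested offline; the function names are hypothetical.

```python
import json
import urllib.request
from typing import Callable, Iterator, Optional


def http_get_json(url: str, token: str) -> dict:
    """GET a URL with the Bearer token and decode the JSON body."""
    request = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def iter_results(first_url: str, fetch: Callable[[str], dict]) -> Iterator[dict]:
    """Yield every item of a paginated Drive response by following "next"."""
    url: Optional[str] = first_url
    while url:
        page = fetch(url)
        yield from page["results"]
        url = page.get("next")
```

In practice, fetch would be something like lambda url: http_get_json(url, token).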

You can also search for resources within an Organization whose name matches a string.

curl --request GET \

--url 'https://cloud.pix4d.com/common/api/v4/drive/organization/58101312-de35-4a22-a951-943eba20a041/search/?q=inside' \

--header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}"

The paginated response is the same format as for the list endpoint:

{

"count": 3,

"next": null,

"previous": null,

"results": [

{

"legacy_id": 97389,

"uuid": "72cd5e09-38a6-4490-b8e7-97486f01e45d",

"type": "folder",

"date": "2025-02-21T13:45:24.978372+01:00",

"name": "Vancouver",

"metadata": null

},

{

"legacy_id": 438767,

"uuid": "baf79c5a-c402-45c9-9bf8-411eed93507c",

"type": "project_group",

"date": "2025-02-21T13:46:02.684781+01:00",

"name": "Vancouver 2",

"metadata": null

},

{

"legacy_id": 438766,

"uuid": "65a6914f-8db8-4ba3-ac31-4d5e388beee3",

"type": "project_group",

"date": "2025-02-21T13:45:52.247435+01:00",

"name": "Vancouver 1",

"metadata": null

}

]

}
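Search strings can contain spaces or reserved characters such as &, so the q parameter should be percent-encoded rather than pasted into the URL. The following is a small Python sketch that builds the search URL with the standard library and groups the returned names by resource type. The helper names build_search_url and group_by_type are hypothetical; the URL layout is taken from the curl example above.

```python
from urllib.parse import urlencode

# Base path of the Drive endpoints shown in this section.
DRIVE_API = "https://cloud.pix4d.com/common/api/v4/drive"


def build_search_url(organization_uuid: str, query: str) -> str:
    """Build the Drive search URL, percent-encoding the search string as `q`."""
    return f"{DRIVE_API}/organization/{organization_uuid}/search/?{urlencode({'q': query})}"


def group_by_type(results: list) -> dict:
    """Group the names in a search (or list) response by their `type` field."""
    grouped: dict = {}
    for item in results:
        grouped.setdefault(item["type"], []).append(item["name"])
    return grouped
```

Note that urlencode encodes spaces as + (form encoding), which is the usual convention for query strings.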

Given a particular resource (identified by its type and uuid), you can also retrieve its "path" in the resource tree, which can be used to build breadcrumbs.

curl --request GET \

--url https://cloud.pix4d.com/common/api/v4/drive/project_group/baf79c5a-c402-45c9-9bf8-411eed93507c/path/ \

--header "Authorization: Bearer ${PIX4D_ACCESS_TOKEN}"

A successful response will be in the following format:

{

"path": [

{

"uuid": "baf79c5a-c402-45c9-9bf8-411eed93507c",

"type": "project_group",

"name": "Vancouver 2"

},

{

"uuid": "72cd5e09-38a6-4490-b8e7-97486f01e45d",

"type": "folder",

"name": "Vancouver"

},

{

"uuid": "0cda2c1a-e52f-4c54-aec6-e827d09c232a",

"type": "folder",

"name": "Canada"

}

],

"owner": {

"type": "organization",

"uuid": "58101312-de35-4a22-a951-943eba20a041",

"name": "Customer ABC Ltd"

},

"more_ancestors": false

}
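In the sample above, path starts with the requested resource (Vancouver 2) and walks up towards the root (Vancouver, then Canada), while owner names the organization at the top. The following is a minimal Python sketch of turning that response into a breadcrumb string. It assumes that more_ancestors set to true means some higher ancestors were left out of path; the function name build_breadcrumbs is hypothetical.

```python
def build_breadcrumbs(path_response: dict, separator: str = " / ") -> str:
    """Turn a Drive path response into a top-down breadcrumb string."""
    # `path` lists the resource first and its ancestors after it,
    # so reverse it to read from the top of the tree down.
    names = [entry["name"] for entry in reversed(path_response["path"])]
    # Assumption: more_ancestors == True means ancestors were omitted,
    # so mark the gap instead of hiding it.
    if path_response.get("more_ancestors"):
        names.insert(0, "...")
    owner = path_response.get("owner")
    if owner:
        names.insert(0, owner["name"])
    return separator.join(names)
```

With the sample response, this yields "Customer ABC Ltd / Canada / Vancouver / Vancouver 2".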

List projects

List projects the user can access.

Details

A public_status field is provided to clients. It can take the values CREATED, UPLOADED, PROCESSING, DONE, ERROR.

A more detailed status is available in the display_detailed_status field. It is intended for display only and should not be used for program logic, because it reflects an internal status whose names and flow may change.
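Because only public_status is meant for logic, a client that polls a project should branch on that field alone. The following is a minimal Python sketch. It assumes that DONE and ERROR are the states at which a poller can stop waiting, which the text does not state explicitly. The names processing_finished and FINISHED_STATUSES are hypothetical.

```python
# The public_status values listed above.
PUBLIC_STATUSES = {"CREATED", "UPLOADED", "PROCESSING", "DONE", "ERROR"}
# Assumption: DONE and ERROR are the states at which polling can stop.
FINISHED_STATUSES = {"DONE", "ERROR"}


def processing_finished(project: dict) -> bool:
    """Decide whether processing has ended, using public_status only.

    display_detailed_status is deliberately ignored: it is for display and
    its values may change.
    """
    status = project["public_status"]
    if status not in PUBLIC_STATUSES:
        raise ValueError(f"unexpected public_status: {status!r}")
    return status in FINISHED_STATUSES
```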

Filtering

It is possible to filter projects returned using query parameters:

- public_status to include a status

- public_status_exclude to exclude a status

- id to include a project id

- id_exclude to exclude a project id

- name to include a name

- name_exclude to exclude a name

- display_name to include a display name

- display_name_exclude to exclude a display name

- project_group_uuid to include projects in a project group

To pass multiple values, repeat the parameter once per value. If several parameters are specified, a project must match a value for every one of them in order to be returned (i.e. parameters are ANDed together).
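Repeating a parameter once per value is exactly what Python's urlencode does with doseq=True. The following is a minimal sketch. The function name build_project_filter_query is hypothetical, and the projects-list URL the query is appended to is not shown in this section, so only the query string is built.

```python
from urllib.parse import urlencode


def build_project_filter_query(filters: dict) -> str:
    """Encode project-list filters as a query string.

    Each value in a list becomes its own key=value pair, so a parameter is
    repeated once per value.
    """
    return urlencode(filters, doseq=True)
```

For example, build_project_filter_query({"public_status": ["DONE", "ERROR"], "name_exclude": ["demo"]}) returns "public_status=DONE&public_status=ERROR&name_exclude=demo". Under the rule above, this asks for projects that are DONE or in ERROR and are not named demo.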

Response

There are 2 ways to serialize a project:

- A simple serializer that returns basic project information and is fast

- A detailed serializer that returns detailed information, including some sub-objects, but that takes longer to compute.

By default, the list REST action uses the simple serializer while retrieve uses the detailed one. You can override the serializer used by passing a serializer query parameter with value simple or detailed.

Authorizations:

query Parameters

| Parameter | Type | Description |
| page | integer | A page number within the paginated result set. |
| page_size | integer | Number of results to return per page. |
| project_group_uuid | array of strings (uuid) | Multiple values may be separated by commas. |
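The paging parameters, the serializer override, and the comma-separated project_group_uuid form can be combined in one query string. The following is a Python sketch that leaves out any parameter that is not set, so the server defaults apply. The function name build_project_list_query is hypothetical. urlencode percent-encodes the commas as %2C, which a standard query-string parser decodes back to commas.

```python
from typing import Iterable, Optional
from urllib.parse import urlencode

SERIALIZERS = {"simple", "detailed"}


def build_project_list_query(
    page: Optional[int] = None,
    page_size: Optional[int] = None,
    serializer: Optional[str] = None,
    project_group_uuids: Iterable[str] = (),
) -> str:
    """Build the query string for the project list, omitting unset parameters."""
    params: dict = {}
    if page is not None:
        params["page"] = page
    if page_size is not None:
        params["page_size"] = page_size
    if serializer is not None:
        if serializer not in SERIALIZERS:
            raise ValueError(f"serializer must be one of {sorted(SERIALIZERS)}")
        params["serializer"] = serializer
    uuids = list(project_group_uuids)
    if uuids:
        # The parameter table allows several uuids separated by commas.
        params["project_group_uuid"] = ",".join(uuids)
    return urlencode(params)
```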

Responses

Response samples

200

{
  "count": 123,
  "results": [
    {
      "embed_urls": {
        "property1": "string",
        "property2": "string"
      },
      "error_reason": "string",
      "error_code": 0,
      "last_datetime_processing_started": "2019-08-24T14:15:22Z",
      "last_datetime_processing_ended": "2019-08-24T14:15:22Z",
      "s3_bucket_region": "string",
      "never_delete": true,
      "under_trial": true,
      "source": "string",
      "owner_uuid": "string",
      "credits": 0,
      "crs": {
        "definition": "string",
        "geoid_height": 0.1,
        "extensions": null,
        "identifier": "string",
        "name": "string",
        "type": "string",
        "units": {
          "property1": null,
          "property2": null
        },
        "axes": {
          "property1": null,
          "property2": null
        },
        "epsg": 0,
        "esri": 0,
        "wkt1": "string",
        "wkt2": "string",
        "proj_pipeline_to_wgs84": "string"
      },
      "public_share_token": "c827b6b5-2f34-47ee-824e-a48b2ab6b708",
      "public_status": "string",
      "detail_url": "string",
      "image_count": 0,
      "public_url": "string",
      "acquisition_date": "2019-08-24T14:15:22Z",
      "is_geolocalized": true,
      "s3_base_path": "string",
      "display_name": "string",
      "id": 0,
      "uuid": "095be615-a8ad-4c33-8e9c-c7612fbf6c9f",
      "user_display_name": "string",
      "created_by": "string",
      "project_type": "pro",
      "project_group_id": 0,
      "create_date": "2019-08-24T14:15:22Z",
      "name": "string",
      "bucket_name": "string",
      "project_thumb": "string",
      "front_end_public_group_url": "string",
      "front_end_public_url": "string",
      "is_demo": true,
      "display_detailed_status": "string",
      "coordinate_system": "string"
    }
  ]
}

Create an empty project

Create an empty project.

name is a mandatory parameter and is limited to 100 characters. It cannot contain slashes, must not start with a dash, and cannot end with whitespace.
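Checking the name on the client side avoids a round trip for requests that would be rejected. The following is a minimal Python sketch of the rules above. It assumes that "slashes" covers both / and \, and that the 100-character limit counts Unicode code points as Python's len does. The server remains the authority, and the function name validate_project_name is hypothetical.

```python
MAX_NAME_LENGTH = 100


def validate_project_name(name: str) -> None:
    """Raise ValueError if `name` breaks the documented project-name rules."""
    if not name:
        raise ValueError("name is mandatory")
    # Assumption: the limit counts code points, as len() does.
    if len(name) > MAX_NAME_LENGTH:
        raise ValueError(f"name is limited to {MAX_NAME_LENGTH} characters")
    # Assumption: "slashes" covers both forward slashes and backslashes.
    if "/" in name or "\\" in name:
        raise ValueError("name cannot contain slashes")
    if name.startswith("-"):
        raise ValueError("name must not start with a dash")
    if name[-1].isspace():
        raise ValueError("name cannot end with whitespace")
```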

project_type and acquisition_date are optional.

project_type can take the following values: pro, bim, model, ag.

If project_type is not provided, it defaults to the user's preferred solution as defined in their profile.

billing_model is either CLOUD_STANDARD or ENGINE_CLOUD:

- ENGINE_CLOUD is accepted only if the project is created through a public API interface on an account that has an ENGINE_CLOUD license.

- CLOUD_STANDARD is accepted only if the user has a valid license with cloud allowance.

The request returns a 400 error if the billing model is unknown or invalid for the user.

acquisition_date is expected in ISO 8601 format. If it is not provided, it defaults to the current time.
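Since the API expects JSON, the request body for this endpoint can be assembled as a dict and serialized with json.dumps. The following is a Python sketch that validates the enumerated values listed above and formats acquisition_date with datetime.isoformat (ISO 8601). Optional fields are left out so the server defaults apply. Leaving billing_model out when it is not given is an assumption, since the text does not say whether it is optional. The project-creation URL is not shown in this section, and the function name build_create_project_body is hypothetical.

```python
from datetime import datetime
from typing import Optional

PROJECT_TYPES = {"pro", "bim", "model", "ag"}
BILLING_MODELS = {"CLOUD_STANDARD", "ENGINE_CLOUD"}


def build_create_project_body(
    name: str,
    project_type: Optional[str] = None,
    billing_model: Optional[str] = None,
    acquisition_date: Optional[datetime] = None,
) -> dict:
    """Assemble the JSON body for creating an empty project."""
    body: dict = {"name": name}
    if project_type is not None:
        if project_type not in PROJECT_TYPES:
            raise ValueError(f"project_type must be one of {sorted(PROJECT_TYPES)}")
        body["project_type"] = project_type
    # Assumption: billing_model may be omitted; the text does not say.
    if billing_model is not None:
        if billing_model not in BILLING_MODELS:
            raise ValueError(f"billing_model must be one of {sorted(BILLING_MODELS)}")
        body["billing_model"] = billing_model
    if acquisition_date is not None:
        # isoformat() produces an ISO 8601 timestamp, with offset if tz-aware.
        body["acquisition_date"] = acquisition_date.isoformat()
    return body
```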

The coordinate system is compliant with the Open Photogrammetry Format (OPF) specification.

If passed, coordinate_system is one of:

- a WKT string, version 2 (this includes WKT version 1 strings)

- a string in the form Authority:code, where the code is for a 2D or 3D CRS (e.g. EPSG:21781)

- a string in the form Authority:code+code, where the first code is for a 2D CRS and the second one is for a vertical CRS (e.g. EPSG:2056+5728)

- a string in the form Authority:code+Authority:code, where the first code is for a 2D CRS and the second one is for a vertical CRS

In addition, the following values are accepted for arbitrary coordinate systems:

- ARBITRARY_METERS for the software to use an arbitrary default coordinate system in meters

- ARBITRARY_FEET for the software to use an arbitrary default coordinate system in feet

- ARBITRARY_US_FEET for the software to use an arbitrary default coordinate system in US survey feet
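The accepted forms can be told apart by their shape, which is handy for catching typos before sending a request. The following is a rough Python sketch that classifies a coordinate_system string into the forms listed above. The WKT check is only a heuristic that looks for a leading uppercase keyword followed by "[". The sketch does not detect the unsupported cases below (for example, EPSG:4326 is classified as a valid Authority:code). The function name and return labels are hypothetical.

```python
import re

ARBITRARY_VALUES = {"ARBITRARY_METERS", "ARBITRARY_FEET", "ARBITRARY_US_FEET"}


def classify_coordinate_system(value: str) -> str:
    """Return which documented form a coordinate_system string appears to use."""
    value = value.strip()
    if value in ARBITRARY_VALUES:
        return "arbitrary"
    if re.fullmatch(r"\w+:\w+", value):  # e.g. EPSG:21781
        return "authority_code"
    if re.fullmatch(r"\w+:\w+\+\w+", value):  # e.g. EPSG:2056+5728
        return "authority_code_plus_code"
    if re.fullmatch(r"\w+:\w+\+\w+:\w+", value):  # e.g. EPSG:2056+EPSG:5728
        return "authority_code_plus_authority_code"
    # Heuristic: WKT starts with an uppercase keyword such as PROJCRS[ or PROJCS[.
    if re.match(r"[A-Z][A-Z0-9_]*\s*\[", value):
        return "wkt"
    raise ValueError(f"unrecognized coordinate_system: {value!r}")
```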

If coordinate_system is passed, two optional fields can be added:

- coordinate_system_geoid_height, a float defining the constant geoid height over the underlying ellipsoid, in the units of the vertical CRS axis.

- coordinate_system_extensions, a JSON object with extension-specific objects (for more information: https://pix4d.github.io/opf-spec/specification/control_points.html#crs).
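Because the two optional fields only make sense together with coordinate_system, a helper can require the latter and attach the extras alongside it. The following is a minimal Python sketch operating on a request-body dict. The function name add_coordinate_system is hypothetical. The extensions dict is passed through unchanged, since its contents are defined by the OPF specification.

```python
from typing import Optional


def add_coordinate_system(
    body: dict,
    coordinate_system: str,
    geoid_height: Optional[float] = None,
    extensions: Optional[dict] = None,
) -> dict:
    """Return a copy of `body` with coordinate_system and its optional extras set."""
    result = dict(body)
    result["coordinate_system"] = coordinate_system
    if geoid_height is not None:
        # Constant geoid height, in the units of the vertical CRS axis.
        result["coordinate_system_geoid_height"] = float(geoid_height)
    if extensions is not None:
        result["coordinate_system_extensions"] = extensions
    return result
```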

The request returns a 400 error if coordinate_system is invalid.

Unsupported cases:

- Geographic coordinate systems (e.g. EPSG:4326)

- Non-isometric coordinate systems (all axes must use the same unit of measurement)

In addition, note that to process with PIX4Dmapper:

- the coordinate system has to be compatible with WKT1.

- the coordinate system should not have a vertical component.

Organization Management users should specify the 'parent' of the project by passing:

- parent_type: one of organization, project_group, or folder.

  - organization creates the project in the drive root of an organization.

  - folder and project_group create the project in a specific folder or project group.

- parent_uuid: the uuid of the parent.

processing_email_notification is an optional parameter that defaults to true. Setting it to false disables all email notifications related to project processing events (e.g. start of processing, end of processing).
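For Organization Management users, the parent fields and the notification flag are simply extra keys in the same JSON body. The following is a minimal Python sketch that adds them to a body dict. It validates parent_type against the three documented values and uses uuid.UUID to reject malformed uuids, which also normalizes them to lowercase. The function names add_parent and set_processing_emails are hypothetical, and the endpoint URL is not part of this section.

```python
import uuid

PARENT_TYPES = {"organization", "project_group", "folder"}


def add_parent(body: dict, parent_type: str, parent_uuid: str) -> dict:
    """Return a copy of `body` with the project's parent set."""
    if parent_type not in PARENT_TYPES:
        raise ValueError(f"parent_type must be one of {sorted(PARENT_TYPES)}")
    result = dict(body)
    result["parent_type"] = parent_type
    # uuid.UUID raises ValueError on malformed input and normalizes the text form.
    result["parent_uuid"] = str(uuid.UUID(parent_uuid))
    return result


def set_processing_emails(body: dict, enabled: bool) -> dict:
    """Return a copy of `body` with processing_email_notification set explicitly."""
    result = dict(body)
    result["processing_email_notification"] = enabled
    return result
```

For example, set_processing_emails(add_parent({"name": "Vancouver 3"}, "folder", "72cd5e09-38a6-4490-b8e7-97486f01e45d"), False) describes a project created in the Vancouver folder with processing emails turned off.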

Authorizations: